In computer science, particularly in caching and memory management, the Least Frequently Used (LFU) policy plays an important role. LFU is a cache eviction algorithm: when the cache is full, it removes the items that have been accessed the fewest times to make room for new ones. By keeping the most frequently accessed resources readily available, it can raise the cache hit rate, improve system performance, and reduce latency. Understanding how the least frequently used policy operates, along with its advantages and limitations, is essential for professionals working in data management and system optimization.

Understanding the Least Frequently Used Policy

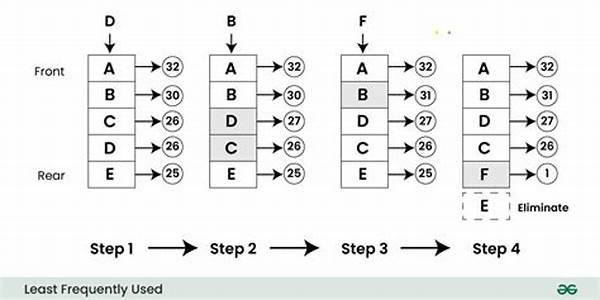

The least frequently used policy is a core concept in cache management. The algorithm ranks items by access frequency so that the most-used data remains in the cache. Implementing LFU involves keeping an access counter for each cached item and, when the cache reaches capacity, evicting the item with the lowest count; ties among equally infrequent items are commonly broken by recency. This approach benefits systems in which some data is used far more often than the rest, because popular items are not pushed out by one-off accesses. Deploying a least frequently used policy can therefore significantly improve efficiency when the set of popular items is fairly stable. Its main weakness is the reverse case: pure LFU adapts slowly to shifting access patterns, since an item that was popular in the past keeps its high count after demand for it fades, which is why practical implementations often add some form of counter aging.
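To make the counting and eviction steps concrete, here is a minimal Python sketch of an LFU cache. It shows one common design, not the only one: each key's access count is stored alongside its value, keys are grouped into per-frequency buckets, and the lowest non-empty frequency is tracked so that `get` and `put` both run in O(1). The class name `LFUCache` and the rule of breaking ties by evicting the least recently used key among those with the lowest count are assumptions made for illustration.

```python
from collections import OrderedDict, defaultdict
from typing import Any, Hashable, Optional


class LFUCache:
    """Minimal LFU cache sketch with O(1) get/put.

    Assumption: among keys sharing the lowest access count, the one
    that reached that count earliest (least recently used) is evicted.
    """

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.values = {}                       # key -> cached value
        self.freqs = {}                        # key -> access count
        self.buckets = defaultdict(OrderedDict)  # count -> keys, oldest first
        self.min_freq = 0                      # lowest count with a non-empty bucket

    def _touch(self, key: Hashable) -> None:
        """Record one access: move key from bucket f to bucket f + 1."""
        f = self.freqs[key]
        del self.buckets[f][key]
        if not self.buckets[f]:
            del self.buckets[f]
            if self.min_freq == f:
                self.min_freq = f + 1  # key itself now lives in bucket f + 1
        self.freqs[key] = f + 1
        self.buckets[f + 1][key] = None

    def get(self, key: Hashable) -> Optional[Any]:
        if key not in self.values:
            return None
        self._touch(key)
        return self.values[key]

    def put(self, key: Hashable, value: Any) -> None:
        if self.capacity <= 0:
            return
        if key in self.values:
            self.values[key] = value
            self._touch(key)
            return
        if len(self.values) >= self.capacity:
            # Evict the oldest key in the lowest-frequency bucket.
            victim, _ = self.buckets[self.min_freq].popitem(last=False)
            if not self.buckets[self.min_freq]:
                del self.buckets[self.min_freq]
            del self.values[victim]
            del self.freqs[victim]
        # A newly inserted key starts with a count of 1.
        self.values[key] = value
        self.freqs[key] = 1
        self.buckets[1][key] = None
        self.min_freq = 1
```

For example, with a capacity of 2, calling `put("a", 1)`, `put("b", 2)`, `get("a")` and then `put("c", 3)` evicts `"b"`, because `"a"` has been accessed twice and `"b"` only once. The per-frequency buckets trade some extra memory for constant-time eviction.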

Key Features of the Least Frequently Used Policy

1. Access Frequency Prioritization: The least frequently used policy prioritizes data retention based on access frequency, ensuring that cached items are relevant and frequently accessed.

2. Efficient Resource Allocation: By evicting the least accessed data, the LFU policy makes better use of limited cache memory, improving performance by reducing the number of cache misses, each of which would otherwise require a slower fetch from the backing store.

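3. Dynamic Adaptation: Frequency counts are updated on every access, so the policy reflects changes in access patterns over time. Pure LFU adapts slowly, however, because counts accumulated in the past persist; variants that periodically decay (age) the counters respond better to fluctuating usage demands.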

4. Enhanced System Efficiency: By keeping frequently accessed data in memory, LFU enhances system speed, reduces latency, and improves user experience.

5. Predictive Data Management: LFU implicitly treats historical access frequency as a predictor of future accesses, which works well when the popularity of items is stable over time.
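In practice, pure LFU adapts to changing access patterns only slowly, because counts accumulated in the past never decrease. A common remedy is aging: periodically reducing every counter so that old accesses weigh less than recent ones. The sketch below halves all counts after every `age_every` accesses. The class name `AgingLFUCache`, the halving rule, the `age_every` parameter, and the `run_scenario` workload are illustrative assumptions, and eviction simply scans all counters (O(n)) to keep the example short.

```python
class AgingLFUCache:
    """LFU sketch whose counters are halved every `age_every` accesses."""

    def __init__(self, capacity: int, age_every: int) -> None:
        self.capacity = capacity
        self.age_every = age_every  # hypothetical knob: accesses between agings
        self.values = {}            # key -> cached value
        self.counts = {}            # key -> (aged) access count
        self.accesses = 0           # total accesses seen; drives the aging schedule

    def _record_access(self, key) -> None:
        self.counts[key] += 1
        self.accesses += 1
        if self.accesses % self.age_every == 0:
            # Aging step: halve every counter so old popularity fades.
            for k in self.counts:
                self.counts[k] //= 2

    def get(self, key):
        if key not in self.values:
            return None
        self._record_access(key)
        return self.values[key]

    def put(self, key, value) -> None:
        if self.capacity <= 0:
            return
        if key not in self.values:
            if len(self.values) >= self.capacity:
                # Evict the key with the smallest aged count (O(n) scan;
                # ties go to the key inserted earliest).
                victim = min(self.counts, key=self.counts.get)
                del self.values[victim]
                del self.counts[victim]
            self.counts[key] = 0
        self.values[key] = value
        self._record_access(key)


def run_scenario(age_every: int) -> set:
    """Hypothetical workload: "old" is hot early, "new" is hot later."""
    cache = AgingLFUCache(capacity=2, age_every=age_every)
    cache.put("old", 0)
    for _ in range(7):
        cache.get("old")
    cache.put("new", 1)
    for _ in range(3):
        cache.get("new")
    cache.put("extra", 2)  # forces exactly one eviction
    return set(cache.values)


print(run_scenario(age_every=10**9))  # effectively no aging
print(run_scenario(age_every=4))
```

Without aging (a very large `age_every`), inserting `"extra"` evicts `"new"`, because `"old"` still carries its early count of 8. With `age_every=4`, the halved count of `"old"` drops below that of `"new"`, so `"old"` is evicted instead. The aging interval is a tuning choice: shorter intervals react faster but also erode the protection that genuinely popular items enjoy.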

Benefits and Challenges of Implementing LFU

The least frequently used policy supports efficient data management, improving operational speed and reliability. It is particularly useful in applications where some items are accessed far more often than others, because it keeps those critical items readily accessible. However, implementing LFU requires maintaining access frequency counters, which introduces overhead: a naive implementation that scans every counter on eviction costs O(n) per eviction, a priority-queue implementation costs O(log n) per access, and bucket-based designs reach O(1) for both operations at the cost of extra bookkeeping memory. Systems must weigh the benefits of better data retention against the resources required to track access metrics. Despite these challenges, the least frequently used policy's ability to improve resource use and response times makes it an attractive option for modern computing environments.

Considerations in Least Frequently Used Policy Deployment

Fundamentally, the least frequently used policy is designed to retain pertinent data by continuously monitoring access frequencies. This tracking requires additional storage and computation, but it lets the cache favor items that are requested repeatedly. Deployment also has to account for how stable the workload is: when access patterns change abruptly, pure LFU tends to retain items whose counts were built up in the past, even after they become obsolete. The policy therefore needs attentive management, both to keep the cost of maintaining frequency counts under control and to age out stale counts. Balancing these concerns is essential for getting the full benefit of the least frequently used policy in dynamic computing environments.

Optimizing System Performance with LFU

System optimization with the least frequently used policy relies on access statistics to improve caching efficiency. By prioritizing frequently accessed data, LFU reduces the space wasted on rarely used items and can raise hit rates, which shortens average retrieval time and reduces processing delays during peak loads. These gains are largest when a stable set of items accounts for most requests. When popularity shifts, pure LFU can hold on to items that are no longer relevant, so pairing it with counter aging helps keep outdated information from clogging memory. Used this way, the policy offers a data-driven approach to memory management that aligns resource use with actual user and application demand.
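The effect of frequency-based retention is easiest to see on a small synthetic trace. The sketch below replays two made-up access sequences through a minimal LFU cache and, as a baseline, an LRU cache of the same size, and counts hits. The first trace mixes a hot pair of keys with a one-off scan; the second shifts popularity from one key to two others. The function names and traces are hypothetical, chosen only for illustration, and both caches are simplified (the LFU version scans for its victim in O(n)).

```python
from collections import OrderedDict


def lfu_hits(trace, capacity):
    """Replay `trace` through a minimal LFU cache and return the hit count."""
    counts = {}  # cached key -> access count; dict order breaks ties (oldest first)
    hits = 0
    for key in trace:
        if key in counts:
            hits += 1
            counts[key] += 1
            continue
        if len(counts) >= capacity:
            victim = min(counts, key=counts.get)  # lowest count is evicted
            del counts[victim]
        counts[key] = 1
    return hits


def lru_hits(trace, capacity):
    """Replay `trace` through a minimal LRU cache and return the hit count."""
    order = OrderedDict()  # least recently used key first
    hits = 0
    for key in trace:
        if key in order:
            hits += 1
            order.move_to_end(key)
            continue
        if len(order) >= capacity:
            order.popitem(last=False)  # evict least recently used
        order[key] = None
    return hits


# Hypothetical traces: a hot pair interrupted by a one-off scan,
# and a workload whose popular key changes partway through.
SCAN_TRACE = ["h1", "h2"] * 3 + ["s1", "s2", "s3", "s4"] + ["h1", "h2"]
SHIFT_TRACE = ["old"] * 5 + ["a", "b"] * 3

print("scan:  LFU", lfu_hits(SCAN_TRACE, 3), "LRU", lru_hits(SCAN_TRACE, 3))
print("shift: LFU", lfu_hits(SHIFT_TRACE, 2), "LRU", lru_hits(SHIFT_TRACE, 2))
```

On the scan trace, LFU keeps `h1` and `h2` through the scan and scores 6 hits against 4 for LRU. On the shift trace, the inflated count of `old` makes LFU miss on every `a`/`b` access, giving 4 hits against 8 for LRU. The two traces together show both the strength of frequency-based retention and the staleness problem that counter aging is meant to address.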

In-Depth Analysis of the Least Frequently Used Policy

The least frequently used policy approaches cache management by tracking how often each item is accessed and using those counts to decide what to keep. Implementing it involves trade-offs, chiefly between the overhead of maintaining counters and the benefits of lower latency and better data availability. As systems scale, keeping an exact count for every item becomes more expensive in memory and update cost, so large deployments may need tailored strategies such as approximate or decaying counters. Applied with these considerations in mind, the LFU policy provides a solid basis for data retention in systems whose access patterns favor a stable set of popular items.

Crafting Effective LFU Strategies

Developing and deploying least frequently used policy strategies requires a thorough understanding of system requirements and data access patterns. Tailoring the LFU implementation to the specific use case (cache size, tie-breaking rule, and whether and how quickly counters age) can improve memory efficiency and system throughput. Monitoring metrics such as hit ratio and eviction rate is essential for assessing the policy's impact and identifying potential bottlenecks. Close collaboration between developers and system architects helps keep the configuration aligned with the system's goals, and continuous evaluation and adjustment allow the cache to keep performing well as workloads evolve.
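Hit ratio and eviction counts are the usual starting points for that kind of monitoring. As a minimal sketch, the cache below (a simple LFU with O(n) eviction, for brevity) keeps hit, miss, and eviction counters that a metrics exporter could read periodically. `InstrumentedLFUCache` and `CacheStats` are hypothetical names, not part of any particular library, and the read-through loop at the end uses a made-up key sequence.

```python
from dataclasses import dataclass


@dataclass
class CacheStats:
    hits: int = 0
    misses: int = 0
    evictions: int = 0

    @property
    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


class InstrumentedLFUCache:
    """Small LFU cache that records hit, miss, and eviction counters."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.values = {}   # key -> cached value
        self.counts = {}   # key -> access count (put and get both count)
        self.stats = CacheStats()

    def get(self, key):
        if key not in self.values:
            self.stats.misses += 1
            return None
        self.stats.hits += 1
        self.counts[key] += 1
        return self.values[key]

    def put(self, key, value) -> None:
        if self.capacity <= 0:
            return
        if key not in self.values and len(self.values) >= self.capacity:
            # Evict the least frequently used key (O(n) scan for brevity).
            victim = min(self.counts, key=self.counts.get)
            del self.values[victim]
            del self.counts[victim]
            self.stats.evictions += 1
        self.counts[key] = self.counts.get(key, 0) + 1
        self.values[key] = value


# Read-through usage: on a miss, load the value and cache it.
cache = InstrumentedLFUCache(capacity=2)
for key in ["a", "b", "a", "c", "a", "b"]:
    if cache.get(key) is None:
        cache.put(key, key.upper())  # stand-in for a load from the backing store
print(cache.stats, f"hit ratio = {cache.stats.hit_ratio:.2f}")
```

Tracking these counters over time, rather than as a single snapshot, is what makes it possible to tell whether a change in cache size or aging interval actually helped.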