In database performance optimization, cache management is a central strategy for speeding up data retrieval and reducing latency. A cache is a fast, temporary storage layer that holds frequently accessed data, so repeated requests can be served without querying the database again. This reduces the load on the database and shortens response times for users. As applications scale and traffic grows, effective cache management becomes essential for keeping database operations responsive.


The Importance of Cache Management in Database Systems

Cache management for databases plays a crucial role in the performance and scalability of modern applications. As data volumes and request rates grow, serving every read directly from the database becomes increasingly expensive. A cache acts as an intermediary storage layer that keeps frequently accessed data close to the application, so repeated reads avoid a database round trip. By reducing the number of queries that reach the database, caches relieve contention and improve response times.

Caching also lightens the load on the underlying database, freeing its resources for complex queries rather than routine, repetitive lookups. A well-implemented caching layer can therefore help organizations control costs by deferring expensive database infrastructure upgrades. In short, cache management improves data access speed and supports resource efficiency, scalability, and overall system reliability.
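The read path described above is often implemented as the cache-aside (lazy loading) pattern. The sketch below is a minimal, single-process illustration under stated assumptions. A plain Python dictionary stands in for the cache, an in-memory SQLite database stands in for the real database, and the `users` table and `get_user_name` method are hypothetical. The cache has no size limit, eviction, or invalidation.

```python
import sqlite3
from typing import Dict, Optional


class CacheAsideRepository:
    """Cache-aside reads: check the cache first, query the database only on
    a miss, then store the result so later reads are served from memory."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        self.cache: Dict[int, Optional[str]] = {}  # unbounded, for illustration only
        self.db_queries = 0  # number of reads that reached the database

    def get_user_name(self, user_id: int) -> Optional[str]:
        if user_id in self.cache:
            return self.cache[user_id]  # cache hit: no database round trip
        self.db_queries += 1  # cache miss: fall through to the database
        row = self.conn.execute(
            "SELECT name FROM users WHERE id = ?", (user_id,)
        ).fetchone()
        name = row[0] if row else None
        self.cache[user_id] = name  # also caches "not found" (negative caching)
        return name


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users VALUES (?, ?)", [(1, "ada"), (2, "grace")])

repo = CacheAsideRepository(conn)
for _ in range(100):
    repo.get_user_name(1)
print(repo.db_queries)  # 1: only the first of 100 reads reached the database
```

Nothing in this version ever evicts or invalidates entries, so it would keep serving the old value after an update. The size, eviction, and consistency concerns discussed in this article address exactly that gap.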

Sound cache management becomes more important as databases grow in complexity and usage. The key factors are cache size, eviction policy, and data consistency, and each should be chosen to fit the application's requirements and infrastructure. Caches also need proper configuration and ongoing monitoring to deliver their full benefit. A clear understanding of application behavior and access patterns lets database administrators size and tune the cache so that performance gains hold up as workloads change.
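One practical way to relate cache size to access patterns is to replay a recorded access trace against caches of different capacities and compare hit rates. The sketch below uses Python's standard `functools.lru_cache`, whose `cache_info()` reports hits and misses. The trace is synthetic and hypothetical. A real trace would come from query or access logs.

```python
from functools import lru_cache


def hit_rate_for_capacity(trace, capacity):
    """Replay an access trace through an LRU cache holding at most
    `capacity` entries and return hits / total lookups."""

    @lru_cache(maxsize=capacity)
    def fetch(key):
        return key  # stand-in for an expensive database read

    for key in trace:
        fetch(key)
    info = fetch.cache_info()
    return info.hits / (info.hits + info.misses)


# Synthetic trace: 5 hot keys, each access followed by a key seen only once.
trace = []
for i in range(1000):
    trace.append(i % 5)      # hot keys 0..4, each reused every 10 accesses
    trace.append(1000 + i)   # cold keys, never reused

for capacity in (4, 9, 10, 64):
    rate = hit_rate_for_capacity(trace, capacity)
    print(f"capacity={capacity:>3}  hit rate={rate:.3f}")
```

In this trace each hot key is reused after 9 other distinct keys. The hit rate therefore stays at 0 for capacities up to 9, jumps to 995/2000 (about 0.50) at capacity 10, and gains nothing beyond that. Real traces rarely show such a sharp cliff, but the same replay approach reveals where adding memory stops paying off.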

Five Key Benefits of Cache Management for Databases

1. Enhanced Performance: Serving reads from a cache reduces the load on the database, speeds up data retrieval, and shortens response times.

2. Reduced Latency: Because frequently accessed data is held in fast storage, cache hits avoid a database round trip and give end users quicker access.

3. Scalability: A well-managed cache absorbs a large share of read traffic, helping systems accommodate growth in data volume and user traffic.

4. Improved Resource Utilization: Offloading repetitive reads helps keep database servers from becoming bottlenecks during peak demand.

5. Cost Efficiency: With fewer queries reaching the database, organizations can reduce or delay spending on database hardware and infrastructure.
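The latency and cost benefits above can be made concrete with a simple back-of-the-envelope model. Assume a cache-aside read path in which every read first checks the cache and only misses go on to the database. Average read latency is then roughly `cache_ms + (1 - hit_rate) * db_ms`, and the read traffic reaching the database is `(1 - hit_rate)` times the total. The latency and traffic figures in the example are hypothetical placeholders, not measurements.

```python
def expected_read_latency_ms(hit_rate: float, cache_ms: float, db_ms: float) -> float:
    """Average latency when every read checks the cache and misses also read the database."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return cache_ms + (1.0 - hit_rate) * db_ms


def db_reads_per_second(total_reads_per_second: float, hit_rate: float) -> float:
    """Read load that still reaches the database after the cache absorbs hits."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return (1.0 - hit_rate) * total_reads_per_second


# Hypothetical figures: 0.5 ms cache lookup, 10 ms database read, 5,000 reads/s.
for hit_rate in (0.0, 0.5, 0.9, 0.99):
    latency = expected_read_latency_ms(hit_rate, cache_ms=0.5, db_ms=10.0)
    load = db_reads_per_second(5000, hit_rate)
    print(f"hit rate {hit_rate:.2f}: ~{latency:.2f} ms per read, ~{load:.0f} database reads/s")
```

With these placeholder numbers, raising the hit rate from 90% to 99% cuts database read traffic tenfold, from about 500 to about 50 reads per second, which is often where the hardware savings come from. At a 0% hit rate the cache makes reads slightly slower, since every read pays for the extra lookup.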

Strategies for Effective Cache Management for Databases

Developing robust cache management strategies is integral to leveraging the full potential of caching systems in database environments. One effective approach involves identifying critical datasets that benefit most from caching. By analyzing access patterns, administrators can determine which data is accessed frequently and should be prioritized for caching. This prioritization not only enhances performance but also optimizes memory usage by ensuring that only pertinent data is stored in cache.
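Analyzing access patterns can start as simply as counting how often each key appears in a log of reads. The sketch below is a minimal illustration. It assumes the access log is available as a list of cache keys, and the `user:N` key format is hypothetical. It returns the most frequently read keys whose share of traffic clears a chosen threshold, as candidates to prioritize for caching.

```python
from collections import Counter


def hot_keys(access_log, top_n, min_share=0.0):
    """Return up to top_n of the most frequently accessed keys, keeping only
    keys whose share of all accesses is at least min_share."""
    counts = Counter(access_log)
    total = sum(counts.values())
    return [
        key
        for key, n in counts.most_common(top_n)
        if total and n / total >= min_share
    ]


# Hypothetical access log of keys read by the application.
log = ["user:1"] * 50 + ["user:2"] * 30 + ["user:3"] * 15 + ["user:4"] * 5
print(hot_keys(log, top_n=3, min_share=0.10))  # ['user:1', 'user:2', 'user:3']
```

The `min_share` threshold keeps rarely read keys out of the candidate list even when `top_n` is generous, so memory goes to data that actually sees repeated reads.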

Clear eviction policies are equally important for effective cache management for databases. Least Recently Used (LRU) evicts the entry that has gone longest without being accessed, while First-In-First-Out (FIFO) evicts the entry that was inserted earliest, regardless of how often it has been read since. Either way, eviction frees space for newer, more relevant data. Another critical strategy is to monitor cache performance regularly, so that bottlenecks can be identified and configurations fine-tuned. Metrics such as the cache hit rate, together with overall system performance, give administrators the evidence they need to make data-driven caching decisions.
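To illustrate how the two eviction policies differ, the sketch below implements a small fixed-capacity cache on top of `collections.OrderedDict`, with the hit and miss counters that hit-rate monitoring relies on. It is a single-threaded illustration, not a production cache, and the `loader` callback is a hypothetical stand-in for a database read.

```python
from collections import OrderedDict


class BoundedCache:
    """Fixed-capacity cache with LRU or FIFO eviction and hit/miss counters."""

    def __init__(self, capacity, policy="lru"):
        if policy not in ("lru", "fifo"):
            raise ValueError("policy must be 'lru' or 'fifo'")
        self.capacity = capacity
        self.policy = policy
        self.data = OrderedDict()  # front = next entry to evict
        self.hits = 0
        self.misses = 0

    def get(self, key, loader):
        if key in self.data:
            self.hits += 1
            if self.policy == "lru":
                self.data.move_to_end(key)  # LRU: a read refreshes recency
            return self.data[key]  # FIFO: reads do not change order
        self.misses += 1
        value = loader(key)  # stand-in for a database read
        self.data[key] = value
        if len(self.data) > self.capacity:
            self.data.popitem(last=False)  # evict from the front
        return value

    def hit_rate(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


trace = ["a", "b", "a", "c", "a", "d", "a", "e"]  # "a" is hot, the rest are one-offs
results = {}
for policy in ("lru", "fifo"):
    cache = BoundedCache(capacity=2, policy=policy)
    for key in trace:
        cache.get(key, loader=str.upper)
    results[policy] = (cache.hits, cache.misses)
    print(policy, cache.hits, cache.misses)  # prints "lru 3 5", then "fifo 2 6"
```

On this trace LRU keeps the hot key `"a"` resident because every read refreshes it, while FIFO evicts it once it becomes the oldest insertion, even though it is the most frequently read key.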

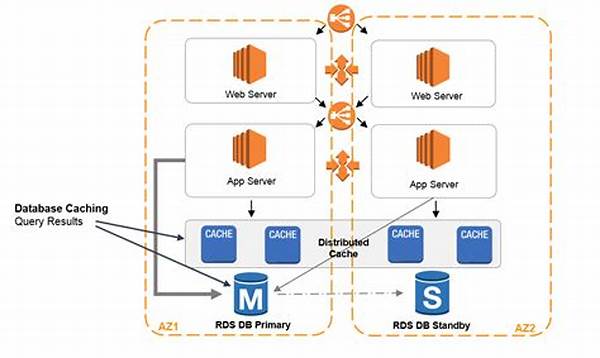

Ensuring data consistency between the cache and the underlying database is also paramount. Validation and invalidation mechanisms, such as expiring entries after a time-to-live or deleting them when the underlying data changes, limit how long users can see outdated information. A multi-layered approach that combines local in-memory caches with distributed caches can further improve data accessibility and redundancy, particularly in distributed database environments. Together, these strategies form a comprehensive framework for effective cache management for databases, improving system performance and reliability.
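Validation and invalidation are commonly implemented as a time-to-live (TTL) on each entry combined with explicit invalidation on writes. The sketch below shows both under simplifying assumptions. A dictionary stands in for the database, `read_price` and `update_price` are hypothetical helpers, and the clock is injectable so expiry can be demonstrated without sleeping. `None` serves as the miss marker, so `None` values cannot be cached. The code is single-threaded: under concurrency, a reader that fetched the old value just before a write can still store it back into the cache after the invalidation.

```python
import time


class TTLCache:
    """Cache whose entries expire ttl_seconds after they are stored."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so the demo can control time
        self._entries = {}  # key -> (value, expires_at)

    def get(self, key):
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._entries[key]  # expired entries count as misses
            return None
        return value

    def set(self, key, value):
        self._entries[key] = (value, self.clock() + self.ttl)

    def invalidate(self, key):
        self._entries.pop(key, None)  # no-op if the key is not cached


def read_price(db, cache, product_id):
    price = cache.get(product_id)
    if price is None:  # miss or expired: reload from the source of truth
        price = db[product_id]
        cache.set(product_id, price)
    return price


def update_price(db, cache, product_id, price):
    db[product_id] = price  # 1. write to the database first
    cache.invalidate(product_id)  # 2. then drop the now-stale cached copy


now = [0.0]  # fake clock, in seconds
cache = TTLCache(ttl_seconds=30, clock=lambda: now[0])
db = {"sku-1": 100}

print(read_price(db, cache, "sku-1"))  # 100 (miss, loaded from db)
db["sku-1"] = 120  # a change that bypasses update_price, so nothing invalidates
print(read_price(db, cache, "sku-1"))  # 100 (stale, still within the TTL)
now[0] = 31.0
print(read_price(db, cache, "sku-1"))  # 120 (entry expired, reloaded)
update_price(db, cache, "sku-1", 150)
print(read_price(db, cache, "sku-1"))  # 150 (invalidated on write)
```

The two mechanisms complement each other. Invalidation on write keeps data fresh when every change goes through the application, and the TTL bounds how long staleness can last when a change bypasses it.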

Common Challenges in Cache Management for Databases

1. Data Consistency: Ensuring that cached data remains consistent with the underlying database is a critical aspect of cache management for databases.

2. Cache Invalidation: Determining the right time to invalidate or refresh cached data to maintain relevance without compromising performance.

3. Eviction Policies: Selecting eviction policies that use limited cache space efficiently, minimizing cache misses while still removing stale data.

4. Memory Constraints: Managing limited memory resources effectively to optimize cache size for maximum performance benefits.


5. Distributed Caching: Ensuring consistent and synchronized cache management across distributed database environments.

6. Scalability Issues: Adapting cache management strategies to accommodate growing databases and user demands without degrading performance.

7. Performance Monitoring: Continually monitoring cache performance to detect bottlenecks and optimize configurations for improved results.

8. Security Concerns: Implementing security measures to safeguard cached data against unauthorized access or tampering.

9. Latency Tuning: Fine-tuning cache configurations to minimize latency and optimize data retrieval times.

10. Cache Configuration Complexity: Navigating complex cache configuration settings to ensure compatibility with the overall database infrastructure.
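Memory constraints are often handled by bounding the cache by total size rather than by entry count, since cached rows and query results vary widely in size. The sketch below is a minimal LRU cache with a byte budget. It assumes values are `bytes` objects and counts only their payload length, so a real system would also need to account for per-entry overhead. Values larger than the whole budget are rejected so that the caller reads them directly from the database.

```python
from collections import OrderedDict


class ByteBudgetLRUCache:
    """LRU cache bounded by the total payload size of its values in bytes."""

    def __init__(self, max_bytes):
        self.max_bytes = max_bytes
        self.used_bytes = 0
        self._data = OrderedDict()  # key -> bytes, least recently used first

    def get(self, key):
        if key not in self._data:
            return None
        self._data.move_to_end(key)  # mark as most recently used
        return self._data[key]

    def put(self, key, value):
        if key in self._data:  # replacing: release the old value's bytes first
            self.used_bytes -= len(self._data.pop(key))
        size = len(value)
        if size > self.max_bytes:
            return False  # larger than the whole budget: do not cache it
        self._data[key] = value
        self.used_bytes += size
        while self.used_bytes > self.max_bytes:  # evict until back under budget
            _, evicted = self._data.popitem(last=False)
            self.used_bytes -= len(evicted)
        return True


cache = ByteBudgetLRUCache(max_bytes=10)
cache.put("a", b"12345")  # 5 bytes used
cache.put("b", b"1234")   # 9 bytes used
cache.get("a")            # "a" becomes most recently used
cache.put("c", b"123")    # 12 > 10, so least recent "b" is evicted, leaving 8 bytes
print(cache.get("b"), cache.used_bytes)  # None 8
```

Because a new value is never larger than the budget, the eviction loop always stops before it reaches the entry that was just inserted.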

Emerging Trends in Cache Management for Databases

As technology advances, cache management for databases continues to evolve, embracing new methodologies and innovations. One emerging trend is the integration of artificial intelligence (AI) and machine learning (ML) for dynamic cache optimization. By analyzing real-time data access patterns and user behaviors, AI-driven tools can adapt cache configurations autonomously to maximize performance and resource utilization.

Another significant trend is the increasing adoption of in-memory databases and caches. These solutions keep data in RAM, giving very low-latency access to frequently requested information. In-memory technologies are particularly beneficial for applications that require ultra-low latency and high throughput.

Furthermore, the rise of multi-cloud and hybrid architectures has introduced new complexities in cache management for databases. These environments demand seamless cache synchronization and data consistency across diverse cloud platforms, which calls for sophisticated management tools and strategies. Additionally, the emphasis on data privacy and compliance has led to the development of more secure caching mechanisms that protect sensitive information while preserving performance gains. Collectively, these emerging trends signify a promising future for cache management, where intelligent, efficient, and secure caching solutions will be integral to database operations.

Conclusion: The Future of Cache Management for Databases

In conclusion, cache management for databases will play an increasingly important role as data-driven applications proliferate. With databases growing more complex and user demands rising, efficient cache management is essential for sustainable growth. Its future lies in approaches that use AI and advanced analytics to automate caching decisions, optimize resource allocation, and improve system performance.

Moreover, as databases continue to expand across multiple cloud environments, robust cache management solutions will be vital in ensuring data consistency and accessibility. Successfully implementing cache management strategies requires a balance between technological advancements and practical considerations, such as budget constraints and organizational goals. As organizations strive to deliver seamless data experiences, cache management for databases will remain a cornerstone of database optimization, continually evolving to meet the challenges and opportunities of the digital age.

Summary of Cache Management for Databases

Cache management for databases is an essential component of modern database optimization, driving performance enhancements, scalability gains, and resource efficiencies. By storing frequently accessed data in intermediary caches, this management strategy minimizes database load and reduces latency, ensuring swift data retrieval and improved user experiences. As databases grow in complexity, effective cache management becomes imperative for sustaining system performance and reliability.

Typically, a combination of well-structured eviction policies, continuous performance monitoring, and strategic data prioritization forms the foundation of a robust caching system. Furthermore, emerging trends such as AI-driven cache optimization and in-memory databases offer promising avenues for future advancements. By effectively addressing challenges like data consistency, memory constraints, and distributed caching, organizations can harness the full potential of cache management for databases, driving efficient database operations and maintaining competitive advantages in an increasingly data-centric world.