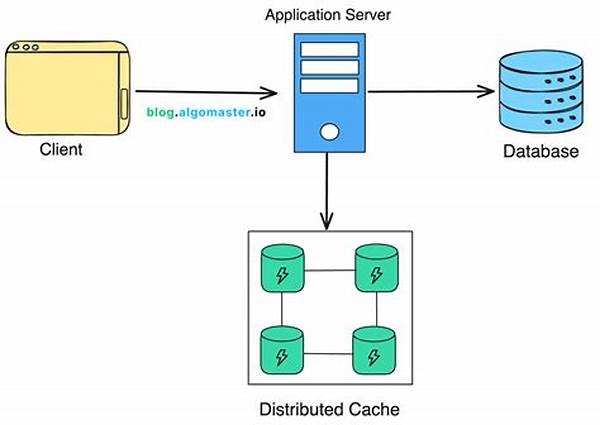

In contemporary computing environments, the role of distributed caches should not be underestimated, especially when performance and scalability are of paramount concern. Distributed caches store data temporarily across multiple nodes, which speeds up data retrieval and reduces the load on central databases. The efficiency and effectiveness of a distributed cache system largely depend on the implementation techniques employed, so understanding and applying the right ones can significantly enhance overall system performance.

Understanding Distributed Cache Implementation Techniques

Distributed cache implementation techniques are crucial in designing a system that efficiently manages cache operations across multiple nodes. These techniques determine how the system balances data consistency, availability, and partition tolerance, since a distributed system cannot fully guarantee all three during a network partition. Notably, they address challenges such as data replication, eviction policies, and fault tolerance. Distributed caching solutions leverage these techniques to improve response times and ensure high availability. The chosen techniques affect data retrieval speeds as well as system resilience to node failures.

Integrating distributed caches seamlessly into existing architectures requires a deep understanding of these techniques. Common implementations use hashing algorithms to distribute data efficiently across nodes. Additionally, managing cache coherence is critical to prevent stale data from being served. Read-through and write-through operations keep the cache and the backing store in step on every access, while write-behind caching defers the store update and trades a short window of inconsistency for faster writes.
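To make the read-through and write-through patterns concrete, here is a minimal single-process sketch. It is an illustration under stated assumptions, not how any particular product works: the `ReadThroughCache` class, its `load` and `store` callbacks, and the in-memory `database` dictionary standing in for a backing store are all hypothetical names.

```python
from typing import Callable, Dict, Optional


class ReadThroughCache:
    """Toy cache illustrating read-through and write-through (hypothetical)."""

    def __init__(
        self,
        load: Callable[[str], Optional[str]],
        store: Callable[[str, str], None],
    ) -> None:
        self._load = load      # assumed callback: fetch a value from the backing store
        self._store = store    # assumed callback: persist a value to the backing store
        self._data: Dict[str, str] = {}
        self.misses = 0

    def get(self, key: str) -> Optional[str]:
        # Read-through: on a miss, the cache itself loads from the backing store.
        if key not in self._data:
            self.misses += 1
            value = self._load(key)
            if value is None:
                return None
            self._data[key] = value
        return self._data[key]

    def put(self, key: str, value: str) -> None:
        # Write-through: update the backing store first, then the cache,
        # so the cache never holds a value the store has not accepted.
        self._store(key, value)
        self._data[key] = value


# A plain dict stands in for the central database in this sketch.
database: Dict[str, str] = {"user:1": "Ada"}
cache = ReadThroughCache(load=database.get, store=database.__setitem__)

first = cache.get("user:1")    # miss: loaded from the database
second = cache.get("user:1")   # hit: served from the cache
cache.put("user:2", "Grace")   # written to both the database and the cache
```

A write-behind variant would instead queue the `store` call and apply it later, which is faster for the caller but leaves a window in which the backing store lags behind the cache.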

A pivotal part of successful cache implementation involves choosing the right tools and frameworks. Solutions like Redis, Memcached, and Apache Ignite embody various distributed cache implementation techniques, offering features that enable elasticity and scalability. These tools often come with built-in support for distributed data structures, fault tolerance mechanisms, and data replication strategies, all of which are essential for a robust distributed cache system.

Key Benefits of Distributed Cache Implementation Techniques

1. Scalability Enhancement: Distributed cache implementation techniques play a critical role in scaling applications by distributing data across multiple nodes.

2. Lower Retrieval Latency: With these techniques, data retrieval times are reduced because frequently requested data is replicated and stored on nodes according to demand.

3. Improved Reliability: Through replication and fault tolerance strategies, distributed caches ensure data availability even during node failures.

4. Consistency Management: Keeping data consistent across a distributed cache system is essential and is achieved through an explicit consistency model, such as eventual consistency.

5. Load Balancing: Implementation techniques contribute to effective load distribution across nodes, preventing any single node from becoming a bottleneck.

Tactical Approach to Distributed Cache Implementation Techniques

When designing distributed systems, selecting appropriate distributed cache implementation techniques is vital for ensuring optimal performance and reliability. These techniques involve various strategies for data distribution, replication, and synchronization to meet the diverse needs of applications. By focusing on aspects like load distribution, latency reduction, and fault tolerance, developers can construct systems that perform efficiently in demanding environments.

A key aspect of distributed cache implementation techniques includes the integration of consistency models, such as strong or eventual consistency, determining how updates are processed across nodes. Further, techniques must address potential bottlenecks in cache synchronization, ensuring coherent data states throughout the system. The use of sharding, consistent hashing, and data partitioning provides the means to balance data loads effectively and improve query efficiency.
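As a rough illustration of how consistent hashing balances data across nodes, the sketch below places each node on a hash ring at many virtual points and assigns a key to the first point at or after its hash. The `HashRing` class, the node names, and the choice of 100 virtual nodes are assumptions made for this example only.

```python
import bisect
import hashlib
from typing import Dict, List


def _hash(value: str) -> int:
    # MD5 is used only as a stable, well-spread hash here, not for security.
    return int(hashlib.md5(value.encode("utf-8")).hexdigest(), 16)


class HashRing:
    """Minimal consistent-hash ring with virtual nodes (hypothetical sketch)."""

    def __init__(self, nodes: List[str], vnodes: int = 100) -> None:
        self._vnodes = vnodes
        self._ring: Dict[int, str] = {}   # ring point -> node name
        self._points: List[int] = []      # sorted ring points
        for node in nodes:
            self.add_node(node)

    def add_node(self, node: str) -> None:
        # Each node owns several points so load spreads more evenly.
        for i in range(self._vnodes):
            point = _hash(f"{node}#{i}")
            self._ring[point] = node
            bisect.insort(self._points, point)

    def node_for(self, key: str) -> str:
        # Walk clockwise to the first point at or after the key's hash,
        # wrapping around to the start of the ring if necessary.
        idx = bisect.bisect_left(self._points, _hash(key)) % len(self._points)
        return self._ring[self._points[idx]]


keys = [f"key-{i}" for i in range(1000)]
ring = HashRing(["cache-a", "cache-b", "cache-c"])
before = {k: ring.node_for(k) for k in keys}

ring.add_node("cache-d")  # scale out by one node
after = {k: ring.node_for(k) for k in keys}
moved = [k for k in keys if before[k] != after[k]]
```

In this sketch only a fraction of the keys move when `cache-d` joins, and every key that moves lands on the new node. A simple `hash(key) % node_count` scheme would instead remap most keys whenever the node count changes.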

Moreover, distributed cache implementation techniques often leverage redundancy and failover mechanisms to maintain operational integrity. Techniques like multi-node replication and quorum-based reads and writes, along with cloud-native architectures, enhance cache resilience. By employing such advanced implementations, organizations can build systems that not only meet performance benchmarks but also adapt to dynamic scalability demands.
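The idea behind quorum-based reads and writes can be sketched with N replicas, a write quorum W, and a read quorum R. When R + W > N, every read set overlaps every write set, so a read sees at least one copy of the latest acknowledged write. The `QuorumStore` class below is a simplified in-memory model with caller-supplied version numbers; real systems add networking, failure handling, and conflict resolution that are omitted here.

```python
from typing import Dict, List, Optional, Tuple

Versioned = Tuple[int, str]  # (version, value)


class QuorumStore:
    """In-memory model of quorum reads and writes (hypothetical sketch)."""

    def __init__(self, n: int, w: int, r: int) -> None:
        if r + w <= n:
            raise ValueError("need R + W > N so read and write sets overlap")
        self.replicas: List[Dict[str, Versioned]] = [{} for _ in range(n)]
        self.w = w
        self.r = r

    def write(self, key: str, value: str, version: int, targets: List[int]) -> None:
        # A write succeeds only if at least W distinct replicas accept it.
        if len(set(targets)) < self.w:
            raise ValueError("write quorum not reached")
        for i in targets:
            self.replicas[i][key] = (version, value)

    def read(self, key: str, sources: List[int]) -> Optional[str]:
        # A read consults at least R distinct replicas and keeps the newest version.
        if len(set(sources)) < self.r:
            raise ValueError("read quorum not reached")
        answers = [self.replicas[i][key] for i in sources if key in self.replicas[i]]
        if not answers:
            return None
        return max(answers, key=lambda item: item[0])[1]


store = QuorumStore(n=3, w=2, r=2)
store.write("k", "v1", version=1, targets=[0, 1])
store.write("k", "v2", version=2, targets=[1, 2])

# Replica 0 still holds the stale "v1", but the read set also includes
# replica 2, which holds "v2", so the newest value wins.
latest = store.read("k", sources=[0, 2])
```

Here `latest` is `"v2"` even though one of the two replicas consulted is stale, which is the overlap guarantee in action.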

Advanced Distributed Cache Implementation Strategies

1. Consistent Hashing: This technique allows for uniform data distribution by hashing keys to cache nodes, minimizing the number of keys that must move when nodes are added or removed.

2. Data Partitioning: Dividing data into partitions helps manage large datasets, optimizing resource utilization.

3. Replication Strategies: Implementing multiple data replicas across nodes increases fault tolerance through redundancy.

4. Sharding Techniques: Distributing data based on key space sharding enhances retrieval speeds and balance.

5. Cache Coherence Protocols: These ensure data freshness and consistency across distributed caches by managing state synchronization.

6. Eviction Policies: Policies like LRU (least recently used) and LFU (least frequently used) define which entries are discarded when the cache is full, optimizing cache storage.

7. Read/Write Operations: Strategies like read-through, write-through, and write-behind control how the cache and the backing store are kept in sync.

8. Quorum-Based Models: Requiring a minimum number of replicas to acknowledge each read and write helps preserve data integrity and availability across distributed nodes.

9. Cache Warming: Pre-loading caches with frequently accessed data reduces initial loading times, facilitating quicker responses.

10. Cross-Region Replication: Enhances global system availability and balance by replicating data across geographically distant regions.
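Of the strategies listed above, eviction is the easiest to show in a few lines. The following is a minimal sketch of an LRU policy for a single node, built on Python's `collections.OrderedDict`; the `LRUCache` class and its method names are illustrative assumptions rather than the API of any specific caching product.

```python
from collections import OrderedDict
from typing import Any, Hashable, List, Optional


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used entry."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._capacity = capacity
        self._items: "OrderedDict[Hashable, Any]" = OrderedDict()

    def get(self, key: Hashable) -> Optional[Any]:
        if key not in self._items:
            return None
        # Reading an entry marks it as the most recently used.
        self._items.move_to_end(key)
        return self._items[key]

    def put(self, key: Hashable, value: Any) -> None:
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self._capacity:
            # The first entry in the ordering is the least recently used.
            self._items.popitem(last=False)

    def keys(self) -> List[Hashable]:
        # Ordered from least to most recently used.
        return list(self._items)


lru = LRUCache(capacity=2)
lru.put("a", 1)
lru.put("b", 2)
lru.get("a")       # "a" is now more recently used than "b"
lru.put("c", 3)    # over capacity: "b" is evicted
```

After these calls the cache holds `"a"` and `"c"`. An LFU policy would instead track an access count per entry and evict the entry with the lowest count.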

Exploring Challenges in Distributed Cache Implementation Techniques

Applying distributed cache implementation techniques presents several challenges that require strategic solutions. One major challenge lies in ensuring consistency across distributed nodes, particularly under high concurrency. Techniques need to be robust enough to handle race conditions and ensure data integrity. Another significant challenge is cache invalidation: cached data must be refreshed or removed when the underlying data changes. Time-to-live (TTL) settings and cache invalidation protocols must be precisely managed to avoid serving stale data.
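A TTL setting combined with explicit invalidation might look like the sketch below. It is a single-node illustration with hypothetical names (`TTLCache`, `invalidate`) and an injectable clock so that expiry can be demonstrated without waiting; the 30-second TTL is an arbitrary example value.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class TTLCache:
    """Cache whose entries expire after a fixed time-to-live (hypothetical sketch)."""

    def __init__(
        self,
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._items: Dict[str, Tuple[float, str]] = {}  # key -> (expires_at, value)

    def put(self, key: str, value: str) -> None:
        self._items[key] = (self._clock() + self._ttl, value)

    def get(self, key: str) -> Optional[str]:
        entry = self._items.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            # Expired entries are dropped lazily on access.
            del self._items[key]
            return None
        return value

    def invalidate(self, key: str) -> None:
        # Explicit invalidation, e.g. when the underlying record changes.
        self._items.pop(key, None)


now = [0.0]  # fake clock, advanced manually for the demonstration
ttl_cache = TTLCache(ttl_seconds=30, clock=lambda: now[0])

ttl_cache.put("price:42", "9.99")
fresh = ttl_cache.get("price:42")    # still within the TTL
now[0] = 31.0
expired = ttl_cache.get("price:42")  # past the TTL, so treated as a miss

ttl_cache.put("price:7", "1.50")
ttl_cache.invalidate("price:7")      # removed before its TTL elapses
```

A short TTL limits how long stale data can be served at the cost of more misses, while explicit invalidation removes an entry immediately but requires the writer to know which keys are affected.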

Furthermore, managing network latency and bandwidth consumption is critical in distributed cache systems. As data travels across nodes, latency can affect performance, so distributed cache implementation techniques often employ proximity-based caching strategies to mitigate this. Additionally, maintaining fault tolerance in dynamic network environments is essential. Implementations must have resilient recovery paths and data backup to ensure availability even amid network disruptions or hardware failures.

Lastly, the challenge of scalability persists: accommodating growing data loads without degrading performance demands sophisticated scaling strategies, such as horizontal scaling and auto-scaling frameworks. By adopting adaptive distributed cache implementation techniques and utilizing system-wide monitoring, organizations can effectively address these challenges, achieving optimal performance and reliability in distributed caching environments.

Summary of Distributed Cache Implementation Techniques

The utilization of distributed cache implementation techniques allows modern computing systems to achieve rapid data retrieval and balanced load management. These techniques are central to empowering systems with the scalability necessary to accommodate burgeoning data demands. By incorporating strategies that ensure data consistency, reliability, and optimal cache usage, these techniques significantly enhance performance.

Despite the rewards, the implementation of distributed cache techniques is not without its complexities. Ensuring consistency, managing cache invalidation, and addressing latency issues present ongoing challenges. However, with effective strategies like sharding, replication, and fault tolerance mechanisms, these challenges can be surmounted. By leveraging the power of distributed cache systems, organizations can maintain cutting-edge infrastructure that excels under pressure.

In summary, distributed cache implementation techniques are indispensable to constructing high-performance, scalable architectures. As demand on systems increases, the need for refined caching strategies becomes more critical. By embracing innovative implementation techniques, enterprises can ensure that their systems are well-positioned to meet the demands of ever-evolving computational landscapes, thereby securing advantages in speed, reliability, and efficiency.