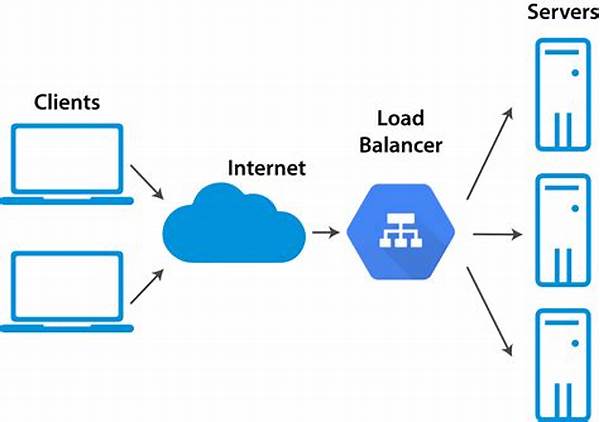

In contemporary software architecture, Application Programming Interfaces (APIs) serve as the backbone for communication among diverse systems, and load balancing is one of the critical factors in keeping those interactions efficient and reliable. Load balancing in API deployment refers to distributing incoming network traffic across multiple servers or resources to provide scalability, fault tolerance, and better performance. Its goal is to keep any single server from being overwhelmed with requests, which helps maintain response times and improves the user experience. Load balancing therefore contributes significantly to the stability and robustness of API deployments, which must handle varying loads and demands.

The Importance of Load Balancing in API Deployment

In the landscape of digital services, load balancing is indispensable to API deployment. First, it supports high availability: traffic can be routed away from a failed server to healthy ones. Second, it improves resource utilization by spreading workloads evenly. Third, it can strengthen security by helping absorb and mitigate attacks such as Distributed Denial of Service (DDoS). Fourth, it enables scalability, since servers can be added behind the load balancer as demand grows. Lastly, it keeps the user experience consistent by preventing overloaded servers from driving up latency. As API traffic grows, effective load balancing strategies become pivotal to keeping APIs efficient and resilient under varying conditions and demands.

Techniques for Load Balancing in API Deployment

Load balancing in API deployment can use several algorithms to distribute traffic, the most common being round-robin, least connections, and IP hash. Round-robin assigns incoming requests to servers in a fixed cyclic order; it is simple and gives each server an equal share of requests. Least connections sends each new request to the server with the fewest active connections, which improves responsiveness when requests vary in cost. IP hash derives the target server from a hash of the client's IP address, so a given client is consistently routed to the same server as long as the server pool does not change. Each strategy has its own benefits and suits different workloads, which lets load balancing adapt to the demands of different API infrastructures.
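
The three algorithms can be sketched in a few lines of Python. This is a minimal, single-threaded illustration that assumes a fixed list of backends. The class names (`RoundRobin`, `LeastConnections`, `IPHash`) and server names (`api-1` through `api-3`) are hypothetical and do not reflect the API of any real load balancer. SHA-256 is an arbitrary choice of stable hash.

```python
import hashlib
import itertools
from typing import Dict, List


class RoundRobin:
    """Hand out servers in a fixed cyclic order."""

    def __init__(self, servers: List[str]) -> None:
        self._cycle = itertools.cycle(servers)

    def pick(self) -> str:
        return next(self._cycle)


class LeastConnections:
    """Send each request to the server with the fewest in-flight requests."""

    def __init__(self, servers: List[str]) -> None:
        self.active: Dict[str, int] = {s: 0 for s in servers}

    def acquire(self) -> str:
        # min() returns the first server in list order when counts are tied.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        # Call when the request finishes so the count reflects live load.
        self.active[server] -= 1


class IPHash:
    """Route each client IP to the same server while the pool is unchanged."""

    def __init__(self, servers: List[str]) -> None:
        self.servers = list(servers)

    def pick(self, client_ip: str) -> str:
        # A cryptographic digest is stable across processes; Python's built-in
        # hash() for str is randomized per process and would break stickiness.
        digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
        index = int.from_bytes(digest[:8], "big") % len(self.servers)
        return self.servers[index]


if __name__ == "__main__":
    servers = ["api-1", "api-2", "api-3"]  # hypothetical backend names

    rr = RoundRobin(servers)
    print([rr.pick() for _ in range(4)])  # ['api-1', 'api-2', 'api-3', 'api-1']

    lc = LeastConnections(servers)
    first, second = lc.acquire(), lc.acquire()  # api-1, then api-2
    lc.release(first)
    print(lc.acquire())  # api-1: it is idle again and wins the tie with api-3

    ih = IPHash(servers)
    print(ih.pick("203.0.113.7") == ih.pick("203.0.113.7"))  # True
```

In a real deployment these decisions usually live inside a reverse proxy or managed load balancer rather than in application code. Shared counters such as `active` would also need synchronization once requests are handled concurrently.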

Challenges in Load Balancing for API Deployment

Understanding the Intricacies

API deployment poses distinct challenges that call for effective load balancing. First, adjusting dynamically to changing traffic patterns requires sophisticated algorithms and architecture. Second, latency must be kept low across globally distributed systems. Third, the system must scale quickly when workloads fluctuate or peak. Fourth, maintaining a robust security posture against new threats requires load balancing mechanisms that can evolve with those threats. Fifth, balancing automated load balancing decisions against manual adjustments requires a detailed understanding of API workloads. An effective load balancing strategy addresses each of these challenges so that the API keeps operating reliably.

Overcoming Challenges in Load Balancing Strategies

Addressing these challenges is critical for keeping services running smoothly and highly available. It requires adaptive solutions that can handle high-volume traffic without degrading service quality. Effective strategies incorporate robust monitoring to detect traffic spikes and adjust resources accordingly, together with a well-chosen algorithm to distribute requests efficiently across the infrastructure. Redundancy and failover are also essential: health checks should detect failed servers and take them out of rotation so that an unexpected failure does not cause downtime. Load balancing should also integrate with existing security measures to protect against potential threats. Ultimately, overcoming these challenges means combining technical solutions with strategic planning to ensure performance and reliability.
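
Redundancy and failover can be sketched as a pool that periodically probes its backends and skips unhealthy ones during selection. This is an assumed design for illustration only. `HealthAwarePool`, the `check` probe, and the consecutive-failure threshold `max_failures` are hypothetical. Round-robin is used for selection simply because it is the simplest of the algorithms described earlier.

```python
from typing import Callable, Dict, List


class HealthAwarePool:
    """Round-robin over backends, skipping any that fail health checks.

    `check` is a caller-supplied probe (hypothetical here; in practice it might
    request a health endpoint) returning True when the backend looks healthy.
    """

    def __init__(self, servers: List[str], check: Callable[[str], bool],
                 max_failures: int = 3) -> None:
        self.servers = list(servers)
        self.check = check
        self.max_failures = max_failures
        self.failures: Dict[str, int] = {s: 0 for s in self.servers}
        self._next = 0

    def is_up(self, server: str) -> bool:
        return self.failures[server] < self.max_failures

    def run_health_checks(self) -> None:
        # Meant to be called periodically by a monitor. A backend leaves the
        # rotation after `max_failures` consecutive failed probes and rejoins
        # as soon as one probe succeeds.
        for server in self.servers:
            if self.check(server):
                self.failures[server] = 0
            else:
                self.failures[server] += 1

    def pick(self) -> str:
        # Failover: scan at most one full cycle for the next healthy backend.
        for _ in range(len(self.servers)):
            server = self.servers[self._next]
            self._next = (self._next + 1) % len(self.servers)
            if self.is_up(server):
                return server
        raise RuntimeError("no healthy backends available")


if __name__ == "__main__":
    down = {"api-2"}  # simulate one failed backend (hypothetical names)
    pool = HealthAwarePool(["api-1", "api-2", "api-3"],
                           check=lambda s: s not in down, max_failures=1)
    pool.run_health_checks()
    print([pool.pick() for _ in range(4)])  # ['api-1', 'api-3', 'api-1', 'api-3']

    down.clear()  # api-2 recovers
    pool.run_health_checks()
    print([pool.pick() for _ in range(3)])  # ['api-1', 'api-2', 'api-3']
```

Requiring several consecutive failures before removing a backend keeps a single dropped probe from causing the backend to flap in and out of rotation. The demo uses `max_failures=1` only to keep the output short.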

Load Balancing Algorithms in API Deployment

Load balancing algorithms are a cornerstone of efficient resource allocation and system resilience in API deployment. Round-robin, least connections, and IP hash are prominent algorithms for distributing traffic across multiple servers. By spreading requests evenly or according to each server's current load, they reduce bottlenecks and help prevent server overload, keeping the system stable as workloads shift. They also contribute to cost-effectiveness by making fuller use of existing servers and avoiding unnecessary over-provisioning. Because each algorithm makes different assumptions about the workload, it should be selected and configured according to the requirements of the specific deployment.
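
The following toy simulation makes the point about choosing an algorithm concrete. It illustrates a made-up workload and is not a benchmark. One request arrives per tick, and expensive 5-tick calls alternate with cheap 1-tick calls. The simulation records the peak number of concurrent requests on each server. The `simulate` function, the chooser callables, and the server names are hypothetical.

```python
import heapq
from typing import Callable, Dict, List, Tuple

# A chooser receives the current tick and live per-server counts.
Chooser = Callable[[int, Dict[str, int]], str]


def simulate(durations: List[int], servers: List[str],
             choose: Chooser) -> Dict[str, int]:
    """Return the peak number of concurrent requests seen on each server.

    One request arrives per tick; request i occupies its server for
    durations[i] ticks.
    """
    active = {s: 0 for s in servers}
    peak = {s: 0 for s in servers}
    finishing: List[Tuple[int, str]] = []  # min-heap of (end_tick, server)
    for tick, duration in enumerate(durations):
        # Release every request that has completed by this tick.
        while finishing and finishing[0][0] <= tick:
            _, done = heapq.heappop(finishing)
            active[done] -= 1
        server = choose(tick, active)
        active[server] += 1
        peak[server] = max(peak[server], active[server])
        heapq.heappush(finishing, (tick + duration, server))
    return peak


if __name__ == "__main__":
    servers = ["api-1", "api-2"]  # hypothetical backends
    durations = [5, 1] * 10  # hypothetical alternating expensive/cheap calls

    def round_robin(tick: int, active: Dict[str, int]) -> str:
        # One arrival per tick, so the tick number doubles as a request counter.
        return servers[tick % len(servers)]

    def least_connections(tick: int, active: Dict[str, int]) -> str:
        return min(active, key=active.get)

    print(simulate(durations, servers, round_robin))
    # {'api-1': 3, 'api-2': 1}
    print(simulate(durations, servers, least_connections))
    # {'api-1': 2, 'api-2': 1}
```

Round-robin gives both servers the same number of requests. With this workload, however, it sends every 5-tick call to `api-1`, which peaks at three concurrent requests. Least connections reacts to live load and keeps each server at two or fewer. The workload's cost profile, not just the request count, is what distinguishes the two algorithms here.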

Conclusion: The Necessity of Load Balancing in API Deployment

In summary, load balancing is vital to the sustained, efficient operation of modern API-based systems. By distributing incoming requests across multiple servers, it improves reliability, enhances performance, and protects against the failure of individual servers. It is an integral component of a scalable infrastructure that can handle varying workloads. As demand for APIs increases, robust load balancing becomes a necessity rather than an option. Businesses should therefore adopt and continually tune load balancing techniques to meet evolving API demands, minimizing service interruptions and improving user satisfaction.

Future Prospects of Load Balancing Solutions

Looking forward, load balancing for API deployment will likely continue to evolve, driven by advances in technology and rising demand for scalable, efficient API solutions. Emerging trends such as applying artificial intelligence and machine learning to load balancing decisions may bring greater adaptability and performance optimization. Edge computing and serverless architectures may also introduce new paradigms for handling API traffic, further underscoring the need for innovative load balancing strategies. Staying abreast of these advancements will help organizations manage the complexity of modern API deployments, safeguarding system resilience and operational efficiency.