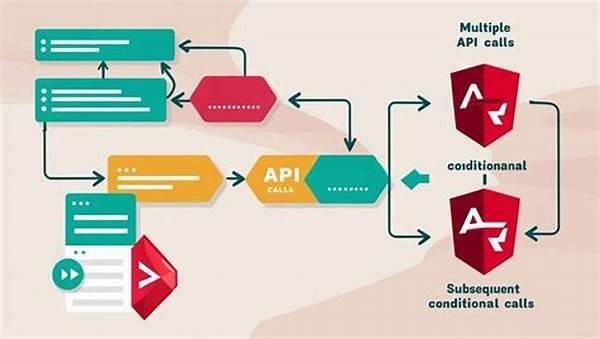

Executing multiple API calls concurrently is a key technique in modern software development for improving performance and reducing the latency of web applications. This practice, often called parallel API call execution, lets a system issue independent requests at the same time instead of waiting for each one to finish before sending the next, so time that would otherwise be spent idle on the network is put to use. As applications grow in scale, the demand for fast data retrieval and processing grows with them, making such techniques increasingly important. Developers looking to improve the responsiveness and scalability of their applications should consider building parallel API call execution into their development workflow.


Advantages of Parallel API Call Execution

Parallel API call execution offers several benefits. Primarily, it reduces the time an application spends retrieving and processing data. When independent calls are issued simultaneously, their network waits overlap, so the total wait is closer to the duration of the slowest call than to the sum of all of them. This is particularly valuable in applications that need data from several sources, where it translates directly into faster responses and a smoother user experience.
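As a rough model, assume n independent calls with latencies t_1, ..., t_n and negligible client-side overhead (an idealization that ignores connection limits and server-side contention):

$$
T_{\text{sequential}} = \sum_{i=1}^{n} t_i, \qquad T_{\text{parallel}} \approx \max_{1 \le i \le n} t_i
$$

For example, three calls taking 200 ms, 300 ms, and 500 ms need about 200 + 300 + 500 = 1000 ms in sequence, but only about 500 ms when issued together.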

Furthermore, when combined with load balancing, parallel calls can be spread across several servers or replicas instead of queuing against a single endpoint, which reduces the risk of overloading any one of them and helps applications stay stable during high-traffic periods. Parallel execution also lets developers add features that depend on several data sources without a proportional increase in response time. As applications grow in complexity, these tactics become increasingly important for maintaining scalability, performance, and user satisfaction.
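A minimal client-side sketch of spreading concurrent calls across servers, assuming three hypothetical replica URLs and a simple round-robin policy. The request is simulated with asyncio.sleep so the example runs offline; in many deployments a dedicated load balancer performs this distribution on the server side instead.

```python
import asyncio
import itertools

# Hypothetical replica endpoints; a real deployment would use its own server URLs.
REPLICAS = [
    "https://api-a.example.com",
    "https://api-b.example.com",
    "https://api-c.example.com",
]


async def call_replica(base_url: str, request_id: int) -> tuple[str, int]:
    """Stand-in for an HTTP request: sleeps briefly instead of using the network."""
    await asyncio.sleep(0.01)
    return base_url, request_id


async def dispatch_round_robin(request_ids: list[int]) -> list[tuple[str, int]]:
    """Assign each request to the next replica in turn, then send them all concurrently."""
    replica_cycle = itertools.cycle(REPLICAS)
    calls = [call_replica(next(replica_cycle), rid) for rid in request_ids]
    return await asyncio.gather(*calls)


if __name__ == "__main__":
    for base_url, rid in asyncio.run(dispatch_round_robin(list(range(6)))):
        print(f"request {rid} -> {base_url}")
```

Round robin is only one policy; weighted or least-connections strategies may suit replicas of unequal capacity better.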

Lastly, parallel API call execution can lead to cost savings, particularly in cloud environments where resources are billed by usage. For example, a serverless function billed by execution time spends less billable time idle when it waits on several responses at once rather than one after another. Parallel execution is therefore useful not only for improving application performance but also for controlling resource expenditure.

Implementing Parallel API Call Execution Tactics

Parallel API call execution can be achieved in several ways. One popular approach is asynchronous programming, which lets tasks wait on I/O without blocking the main execution thread. Depending on the programming language, this is expressed with promises, async/await syntax, or callback functions.
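The sketch below uses Python's asyncio with async/await to contrast sequential and concurrent calls. The endpoint names and latencies are hypothetical, and fetch simulates a request with asyncio.sleep rather than performing real HTTP.

```python
import asyncio
import time

# Hypothetical latencies (in seconds) standing in for three independent API endpoints.
FAKE_LATENCIES = {"users": 0.2, "orders": 0.3, "inventory": 0.5}


async def fetch(endpoint: str) -> dict:
    """Simulated API call; a real client would await an HTTP request here."""
    await asyncio.sleep(FAKE_LATENCIES[endpoint])
    return {"endpoint": endpoint, "status": 200}


async def fetch_sequential(endpoints: list[str]) -> list[dict]:
    # Each await completes before the next call starts: total ≈ sum of latencies.
    return [await fetch(e) for e in endpoints]


async def fetch_concurrent(endpoints: list[str]) -> list[dict]:
    # All coroutines are scheduled together: total ≈ the slowest single latency.
    return await asyncio.gather(*(fetch(e) for e in endpoints))


async def main() -> None:
    endpoints = list(FAKE_LATENCIES)
    for runner in (fetch_sequential, fetch_concurrent):
        start = time.perf_counter()
        results = await runner(endpoints)
        elapsed = time.perf_counter() - start
        print(f"{runner.__name__}: {len(results)} results in {elapsed:.2f}s")


if __name__ == "__main__":
    asyncio.run(main())
```

Run as a script, the sequential version should take roughly one second and the concurrent one roughly half a second. Note that asyncio.gather returns results in the order the coroutines were passed, not the order in which they finish.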

Another effective technique is multi-threading or multi-processing. By running calls on multiple threads or processes, applications can carry out several API calls concurrently, speeding up data retrieval and processing. Load balancing strategies also play an important role by distributing requests evenly across servers, which helps avoid overload and keeps performance consistent.
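For code built on a blocking (synchronous) HTTP client, a thread pool gives a similar effect. This is a minimal sketch using Python's concurrent.futures; blocking_fetch is a hypothetical stand-in that sleeps instead of making a request. Threads suit I/O-bound calls like these; in CPython, ProcessPoolExecutor is the usual alternative when the returned data needs heavy CPU-bound processing.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def blocking_fetch(endpoint: str) -> str:
    """Hypothetical blocking API call, simulated with a sleep."""
    time.sleep(0.2)
    return f"{endpoint}: ok"


def fetch_all_threaded(endpoints: list[str], max_workers: int = 4) -> list[str]:
    # Each call runs on a worker thread; time.sleep (like blocking socket I/O)
    # releases the GIL, so the waits overlap. map() yields results in input order.
    with ThreadPoolExecutor(max_workers=max_workers) as executor:
        return list(executor.map(blocking_fetch, endpoints))


if __name__ == "__main__":
    start = time.perf_counter()
    print(fetch_all_threaded(["users", "orders", "inventory", "billing"]))
    print(f"elapsed: {time.perf_counter() - start:.2f}s")
```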

Error handling is a critical aspect of parallel API call execution. Developers must implement robust mechanisms to manage failed requests and retries, so that one failing call does not discard the results of the calls that succeeded. Applications should recover gracefully from errors without degrading the overall user experience. Comprehensive logging and monitoring are also recommended to track API call performance and diagnose issues promptly.
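One common way to handle failures in concurrent calls, sketched here under assumptions: retry transient errors with exponential backoff and jitter, and collect results with return_exceptions=True so a call that ultimately fails is reported alongside the successful ones instead of discarding them. TransientError, flaky_endpoint, and the retry parameters are hypothetical.

```python
import asyncio
import random


class TransientError(Exception):
    """Hypothetical error type for failures worth retrying (e.g. an HTTP 503)."""


async def with_retries(make_call, attempts: int = 3, base_delay: float = 0.05):
    """Await make_call(), retrying TransientError with exponential backoff plus jitter.

    make_call is a zero-argument function returning a fresh coroutine, because a
    coroutine object can only be awaited once.
    """
    for attempt in range(attempts):
        try:
            return await make_call()
        except TransientError:
            if attempt == attempts - 1:
                raise  # out of retries: surface the error to the caller
            delay = base_delay * (2 ** attempt)
            await asyncio.sleep(delay + random.uniform(0, delay))


def flaky_endpoint(failures_before_success: int):
    """Build a simulated call that fails a fixed number of times, then succeeds."""
    state = {"calls": 0}

    async def call() -> str:
        state["calls"] += 1
        if state["calls"] <= failures_before_success:
            raise TransientError(f"attempt {state['calls']} failed")
        return "ok"

    return call


async def main() -> list:
    calls = [flaky_endpoint(0), flaky_endpoint(2), flaky_endpoint(5)]
    # return_exceptions=True keeps one permanent failure from discarding the other results.
    return await asyncio.gather(*(with_retries(c) for c in calls), return_exceptions=True)


if __name__ == "__main__":
    for result in asyncio.run(main()):
        print(repr(result))
```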

Challenges in Parallel API Call Execution

Parallel API calls present several challenges, notably in resource coordination and synchronization. Because concurrent operations may access shared resources at the same time, developers must use appropriate locking mechanisms to prevent race conditions and data inconsistency.
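The following sketch shows a lost-update race on shared state and how a lock prevents it. QuotaTracker is a hypothetical example; asyncio.sleep(0) stands in for the point where a real task would await an API response and let other tasks run. In thread-based code, threading.Lock plays the same role.

```python
import asyncio


class QuotaTracker:
    """Hypothetical shared state: remaining API quota updated by many concurrent tasks."""

    def __init__(self, quota: int) -> None:
        self.remaining = quota
        self._lock = asyncio.Lock()

    async def consume_unsafe(self) -> None:
        current = self.remaining        # read
        await asyncio.sleep(0)          # yield, as a task awaiting an API response would
        self.remaining = current - 1    # write based on a possibly stale read

    async def consume_safe(self) -> None:
        async with self._lock:          # one task at a time runs the read-modify-write
            current = self.remaining
            await asyncio.sleep(0)
            self.remaining = current - 1


async def run_trial(method_name: str, tasks: int = 100) -> int:
    """Start `tasks` concurrent consumers and return the quota left afterwards."""
    tracker = QuotaTracker(quota=tasks)
    await asyncio.gather(*(getattr(tracker, method_name)() for _ in range(tasks)))
    return tracker.remaining


if __name__ == "__main__":
    print("unsafe:", asyncio.run(run_trial("consume_unsafe")))  # > 0: updates were lost
    print("safe:", asyncio.run(run_trial("consume_safe")))      # 0
```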

Moreover, network latency can significantly impact the effectiveness of parallel API call execution tactics. Developers need to design their applications to handle varying latencies gracefully, ensuring that a delay in one API call does not adversely affect others. Timeout settings and retry logic are essential components in managing such scenarios, enabling the application to continue to operate smoothly despite network delays.
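A minimal sketch of per-call timeouts with asyncio.wait_for, assuming hypothetical call names, latencies, and a 0.5-second limit. Because each call has its own timeout, the slow call is cancelled and replaced with a placeholder while the others return normally.

```python
import asyncio


async def slow_call(name: str, latency: float) -> str:
    """Simulated API call with a fixed latency (hypothetical)."""
    await asyncio.sleep(latency)
    return f"{name}: ok"


async def call_with_timeout(name: str, latency: float, timeout: float) -> str:
    try:
        return await asyncio.wait_for(slow_call(name, latency), timeout=timeout)
    except asyncio.TimeoutError:
        # wait_for cancels the slow call; the caller gets a placeholder instead of waiting.
        return f"{name}: timed out"


async def main() -> list[str]:
    return await asyncio.gather(
        call_with_timeout("fast", 0.05, timeout=0.5),
        call_with_timeout("slow", 2.0, timeout=0.5),
        call_with_timeout("medium", 0.2, timeout=0.5),
    )


if __name__ == "__main__":
    print(asyncio.run(main()))
```

Here the whole batch finishes in about 0.5 seconds rather than the 2 seconds the slow call would otherwise take; a timed-out call can then be passed to retry logic or a fallback.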

Best Practices for Parallel API Call Execution

Strategies for Effective Parallel API Call Execution

Resource optimization and execution efficiency are at the core of successful parallel API call execution. Developers must design their application architecture so that concurrency does not compromise performance or reliability. This requires a clear understanding of the application's functional requirements and of the behavior of the APIs involved, such as which calls are independent of one another and what rate limits apply. Applied well, parallel execution can significantly speed up data processing, resulting in faster load times and improved interactivity.


In environments where multiple data sources must be accessed concurrently, parallel API call execution is indispensable. Asynchronous operations can fetch and process data from several endpoints simultaneously, allowing applications to handle large volumes of requests with minimal delay. Developers should favor libraries, frameworks, and language features with built-in concurrency support, such as Python's asyncio module or JavaScript's Promise.all, to reduce the complexity of managing many simultaneous API calls.
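When results can be processed independently, asyncio.as_completed lets an application handle each response as soon as it arrives instead of waiting for the whole batch. The endpoints and latencies below are hypothetical and simulated with asyncio.sleep.

```python
import asyncio


async def fetch(endpoint: str, latency: float) -> tuple[str, float]:
    """Simulated API call (hypothetical endpoints and latencies)."""
    await asyncio.sleep(latency)
    return endpoint, latency


async def process_as_they_arrive(jobs: dict[str, float]) -> list[str]:
    arrival_order = []
    # as_completed yields awaitables in completion order, so fast responses
    # are handled without waiting for the slowest one.
    for next_done in asyncio.as_completed([fetch(e, t) for e, t in jobs.items()]):
        endpoint, _ = await next_done
        arrival_order.append(endpoint)  # real code would process the payload here
    return arrival_order


if __name__ == "__main__":
    jobs = {"reviews": 0.3, "prices": 0.1, "stock": 0.2}
    print(asyncio.run(process_as_they_arrive(jobs)))
```

With the sample latencies, the endpoints are processed fastest first: prices, then stock, then reviews.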

Parallel API call execution extends beyond concurrent processing. It also involves error handling and fallback mechanisms that keep applications resilient. When an API call fails, the application should be able to continue operating by using cached data or an alternative endpoint. Developers should aim to build systems that adapt to failure conditions automatically and keep service delivery uninterrupted. This level of sophistication requires detailed planning and testing, but it pays off in robustness and user satisfaction.
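A sketch of fallback logic, assuming a primary endpoint, an alternative endpoint, and an in-memory cache of last-known-good data; all three are hypothetical and simulated. Each key tries the live sources in order and falls back to the cache only when both fail.

```python
import asyncio

# Hypothetical last-known-good values, e.g. saved from earlier successful responses.
CACHE: dict[str, dict] = {"exchange_rates": {"EUR": 0.92}}


class UpstreamError(Exception):
    """Hypothetical error raised when an upstream API cannot be reached."""


async def fetch_primary(key: str) -> dict:
    # Simulated outage: the primary endpoint fails for every key.
    raise UpstreamError(f"primary unavailable for {key}")


async def fetch_secondary(key: str) -> dict:
    # Simulated alternative endpoint that only serves some keys.
    if key == "weather":
        return {"temp_c": 21}
    raise UpstreamError(f"secondary has no data for {key}")


async def fetch_with_fallback(key: str) -> tuple[str, dict]:
    """Try each live endpoint in order, then fall back to cached data."""
    for source_name, source in (("primary", fetch_primary), ("secondary", fetch_secondary)):
        try:
            data = await source(key)
        except UpstreamError:
            continue  # try the next source
        CACHE[key] = data  # refresh the cache with the fresh response
        return source_name, data
    if key in CACHE:
        return "cache", CACHE[key]
    raise UpstreamError(f"no live or cached data for {key}")


async def main() -> list:
    keys = ["weather", "exchange_rates", "news"]
    return await asyncio.gather(*(fetch_with_fallback(k) for k in keys), return_exceptions=True)


if __name__ == "__main__":
    for outcome in asyncio.run(main()):
        print(outcome)
```

In practice, cached data may be stale, so applications often record where each value came from (as the returned source name does here) to decide how to present it.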

Troubleshooting Parallel API Call Execution

Parallel API calls can introduce problems that require careful troubleshooting. A common one is the race condition, in which multiple threads or processes modify shared resources concurrently and leave them in an inconsistent state. Appropriate synchronization primitives, such as locks, mitigate these problems and preserve data consistency and integrity.

Ensuring that server resources are not overwhelmed is another area that requires vigilance. When implementing parallel API calls, it is crucial to establish proper load balancing and to apply back-pressure, for example by capping the number of requests in flight at once, to regulate request flow. Identifying bottlenecks at both the network and server level allows developers to adjust resource allocation and configuration settings dynamically.
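One simple form of client-side back-pressure is to cap the number of requests in flight. This sketch uses asyncio.Semaphore with an assumed limit of three; the call itself is simulated, and the stats dictionary exists only to show that the cap holds.

```python
import asyncio

MAX_IN_FLIGHT = 3  # assumed limit; tune it to the upstream API's rate limits


async def fetch(i: int, limiter: asyncio.Semaphore, stats: dict) -> int:
    async with limiter:  # waits here while MAX_IN_FLIGHT calls are already running
        stats["in_flight"] += 1
        stats["peak"] = max(stats["peak"], stats["in_flight"])
        await asyncio.sleep(0.05)  # stand-in for the real API call
        stats["in_flight"] -= 1
        return i


async def main(n: int = 10) -> tuple[list[int], int]:
    limiter = asyncio.Semaphore(MAX_IN_FLIGHT)
    stats = {"in_flight": 0, "peak": 0}
    results = await asyncio.gather(*(fetch(i, limiter, stats) for i in range(n)))
    return results, stats["peak"]


if __name__ == "__main__":
    results, peak = asyncio.run(main())
    print(f"{len(results)} calls completed, at most {peak} in flight at once")
```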

Optimizing Parallel API Call Execution for Scalability

As the demands on software systems grow, optimizing parallel API call execution for scalability becomes crucial. Applications need strategies that let them handle increased request volumes without degrading performance. Developers must ensure that their systems can distribute load dynamically, scale computing resources up and down, and keep the number of outstanding API calls under control, using robust frameworks and reliable third-party solutions where necessary.
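A bounded worker pool is one way to keep outstanding API calls under control as volume grows: requests wait in a queue and a fixed number of workers drain it. This is a sketch with hypothetical names and a simulated call; num_workers is the knob one would tune as capacity changes.

```python
import asyncio


async def worker(name: str, queue: asyncio.Queue, results: list) -> None:
    """Take request IDs off the shared queue until cancelled."""
    while True:
        request_id = await queue.get()
        try:
            await asyncio.sleep(0.01)  # stand-in for the real API call
            results.append((name, request_id))
        finally:
            queue.task_done()  # always mark the item handled so queue.join() can finish


async def process(request_ids: list[int], num_workers: int = 4) -> list[tuple[str, int]]:
    """Queue every request, let a fixed pool of workers drain it, then stop the workers."""
    queue: asyncio.Queue = asyncio.Queue()
    results: list[tuple[str, int]] = []
    for rid in request_ids:
        queue.put_nowait(rid)
    workers = [
        asyncio.create_task(worker(f"w{i}", queue, results)) for i in range(num_workers)
    ]
    await queue.join()  # wait until every queued request has been marked done
    for task in workers:
        task.cancel()
    await asyncio.gather(*workers, return_exceptions=True)  # let cancellations finish
    return results


if __name__ == "__main__":
    handled = asyncio.run(process(list(range(20))))
    print(f"{len(handled)} requests handled by {len({name for name, _ in handled})} workers")
```

Unlike launching one task per request, the queue absorbs bursts while the worker count bounds concurrency.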

Designing applications with elasticity in mind also determines how quickly they can adapt to changes in demand. Building parallel API call execution into the architecture from the outset lets businesses sustain growth while maintaining performance and reliability as user demand expands. This requires a forward-thinking mindset and thorough testing to anticipate future needs and adjust to them smoothly.