Understanding Recurrent Neural Networks Architecture

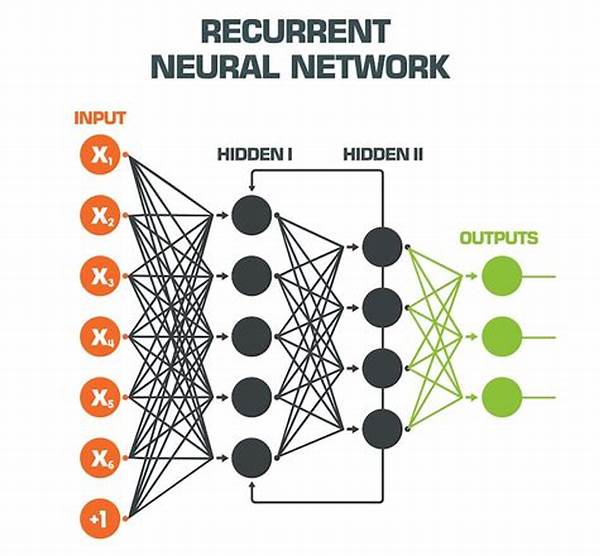

A recurrent neural network (RNN) is a type of artificial neural network designed to handle sequential data. Unlike a traditional feedforward network, an RNN can carry information from past inputs forward and use it when processing new ones. It does this through recurrent connections (loops) in its architecture that let a hidden state persist across time steps. Consequently, RNNs are particularly effective in applications where the context from previous inputs is crucial, such as language modeling, speech recognition, and time-series prediction.

The recurrent neural networks architecture is built around hidden states, which act as a dynamic memory summarizing information from previous computations. At each time step, the hidden state is updated from both the current input and the previous hidden state, so information from earlier in the sequence is carried forward. Training still relies on gradient descent, with gradients computed by backpropagation through time (BPTT), which unrolls the network across the time steps of the sequence. The architecture nonetheless faces challenges such as vanishing and exploding gradients, which can complicate training over long sequences.
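
The hidden state update described above can be sketched in a few lines. This is a minimal illustration, assuming a tanh activation and a zero initial state; the names `rnn_step`, `rnn_forward`, `W_xh`, `W_hh`, and `b_h`, along with the sizes and random weights, are hypothetical and not taken from any particular library. Plain Python lists keep the example dependency-free; a practical implementation would normally use an array library.

```python
import math
import random

def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def rnn_step(x_t, h_prev, W_xh, W_hh, b_h):
    """One time step: h_t = tanh(W_xh x_t + W_hh h_prev + b_h)."""
    from_input = matvec(W_xh, x_t)       # contribution of the current input
    from_memory = matvec(W_hh, h_prev)   # contribution of the previous hidden state
    return [math.tanh(a + b + c) for a, b, c in zip(from_input, from_memory, b_h)]

def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Apply rnn_step over a whole sequence, starting from a zero hidden state."""
    h = [0.0] * len(b_h)
    states = []
    for x_t in inputs:
        h = rnn_step(x_t, h, W_xh, W_hh, b_h)  # the same weights are reused at every step
        states.append(h)
    return states

# Hypothetical sizes and random weights, for illustration only.
random.seed(0)
input_size, hidden_size, seq_len = 3, 4, 5
W_xh = [[random.uniform(-0.5, 0.5) for _ in range(input_size)] for _ in range(hidden_size)]
W_hh = [[random.uniform(-0.5, 0.5) for _ in range(hidden_size)] for _ in range(hidden_size)]
b_h = [0.0] * hidden_size
inputs = [[random.uniform(-1, 1) for _ in range(input_size)] for _ in range(seq_len)]

states = rnn_forward(inputs, W_xh, W_hh, b_h)
print(len(states), len(states[0]))  # one hidden state per time step
```

Because `rnn_step` receives the previous hidden state as an argument, every state in `states` depends on all inputs seen so far, which is the "memory" the architecture relies on.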

Advances in the recurrent neural networks architecture have produced several RNN variants, notably Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs). These variants mitigate the limitations of standard RNN models by adding gates that control the flow of information, which improves their capacity to learn long-term dependencies. As research in this field advances, the recurrent neural networks architecture continues to evolve and remains relevant to sequence-based learning tasks.

Key Characteristics of Recurrent Neural Networks Architecture

1. The recurrent neural networks architecture enables retention of past information through loops, making it proficient in handling time-dependent data.

2. Hidden states within the recurrent neural networks architecture store sequential contexts, crucial for understanding data sequences.

3. Training uses gradient descent with backpropagation through time, which accounts for dependencies across time steps.

4. Challenges like vanishing and exploding gradients pose significant training hurdles in the recurrent neural networks architecture.

5. Variants such as LSTM and GRU were developed to improve the learning of long-term dependencies.

Evolution of Recurrent Neural Networks Architecture

The evolution of the recurrent neural networks architecture has been driven largely by the need to overcome its limitations and expand its capabilities. Conventional RNNs, despite their mechanism for sequential memory, suffer from vanishing gradients: the training signal shrinks as it is propagated back through many time steps. This restricts the range over which RNNs can reliably capture dependencies and limits their use on sequences that require long-term dependency learning. The introduction of Long Short-Term Memory (LSTM) networks marked a pivotal advance in addressing this shortcoming.
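
A small numerical experiment makes the vanishing gradient concrete. The sketch below assumes a deliberately simplified scalar recurrence, h_t = tanh(w * h_{t-1} + x_t), with a hypothetical recurrent weight `w = 0.9` and a constant input; the function name `grad_last_wrt_first` is invented for this illustration. It applies the chain rule step by step to compute how strongly the final state depends on the initial one.

```python
import math

def grad_last_wrt_first(w, inputs, h0=0.0):
    """Return d h_T / d h_0 for the scalar recurrence h_t = tanh(w * h_{t-1} + x_t)."""
    h = h0
    grad = 1.0
    for x_t in inputs:
        h = math.tanh(w * h + x_t)
        # Chain rule: each step multiplies by d h_t / d h_{t-1} = w * (1 - h_t^2).
        grad *= w * (1.0 - h * h)
    return grad

w = 0.9  # hypothetical recurrent weight with magnitude below 1
for seq_len in (5, 20, 50):
    inputs = [0.1] * seq_len
    print(seq_len, abs(grad_last_wrt_first(w, inputs)))
```

Each factor in the product has magnitude at most `|w|`, so with `|w| < 1` the gradient shrinks at least geometrically as the sequence grows. In a full RNN the same effect appears through repeated multiplication by the recurrent weight matrix and the activation derivative.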

LSTM networks, with their distinctive cell state and gated structures, improved the recurrent neural networks architecture by letting the network learn when to remember and when to forget information. As a result, longer-term dependencies within the data can be learned more effectively, making LSTMs suitable for complex sequence prediction tasks. The Gated Recurrent Unit (GRU) further streamlined this approach, retaining many of the LSTM's benefits with a simpler design that uses fewer gates and parameters. The continual enhancement of the recurrent neural networks architecture reflects ongoing research in machine learning and continues to benefit fields that require sequence data processing.
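
The gating idea can be illustrated with a single LSTM step. This is a sketch of the commonly used LSTM formulation (forget, input, and output gates plus a candidate update), written with plain Python lists; the function names, the `params` dictionary layout, and the sizes are assumptions made for this example rather than any library's API.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, b, v):
    """Compute W v + b for a matrix W given as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) + b_i for row, b_i in zip(W, b)]

def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM step; params maps each gate name to a (W, b) pair acting on [h_prev, x_t]."""
    z = h_prev + x_t  # list concatenation of previous hidden state and current input
    f = [sigmoid(a) for a in affine(*params["forget"], z)]        # how much of c_prev to keep
    i = [sigmoid(a) for a in affine(*params["input"], z)]         # how much new content to write
    o = [sigmoid(a) for a in affine(*params["output"], z)]        # how much of the cell to expose
    g = [math.tanh(a) for a in affine(*params["candidate"], z)]   # candidate new content
    c = [f_k * c_k + i_k * g_k for f_k, c_k, i_k, g_k in zip(f, c_prev, i, g)]
    h = [o_k * math.tanh(c_k) for o_k, c_k in zip(o, c)]
    return h, c

def init_params(input_size, hidden_size, rng):
    """Hypothetical random initialization, for illustration only."""
    def layer():
        W = [[rng.uniform(-0.5, 0.5) for _ in range(hidden_size + input_size)]
             for _ in range(hidden_size)]
        return W, [0.0] * hidden_size
    return {name: layer() for name in ("forget", "input", "output", "candidate")}

rng = random.Random(0)
params = init_params(input_size=3, hidden_size=4, rng=rng)
h, c = [0.0] * 4, [0.0] * 4
for _ in range(6):
    x_t = [rng.uniform(-1, 1) for _ in range(3)]
    h, c = lstm_step(x_t, h, c, params)
print(h)
print(c)
```

The cell state `c` is updated additively (`f * c_prev + i * g`), so when the forget gate stays close to 1 and the input gate close to 0, information passes through many steps almost unchanged. That additive path is what helps LSTMs retain long-term dependencies.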

Applications of Recurrent Neural Networks Architecture

1. Language Modeling: The recurrent neural networks architecture is crucial in predicting the likelihood of word sequences, enhancing language understanding.

2. Speech Recognition: Efficient in processing audio sequences, the recurrent neural networks architecture transforms spoken words into text.

3. Time-Series Prediction: Helps in forecasting future data points by analyzing trends from historical data patterns.

4. Sequence Prediction: The recurrent neural networks architecture predicts the next item in a sequence based on prior inputs.

5. Machine Translation: Translates text from one language to another by capturing contextual sentence information.

6. Sentiment Analysis: Analyzes text data to determine sentiment polarity, relying on sequential word relationships.

7. Video Analysis: Assists in understanding video content by processing frame sequences for activity recognition.

8. Music Generation: Employs the recurrent neural networks architecture to create music compositions by learning musical sequences.

9. Bioinformatics: Analyzes genetic sequences by identifying patterns and relationships within DNA sequences.

10. Gaming AI: The architecture aids game development by predicting player movements and informing AI behavior.

Future Prospects of Recurrent Neural Networks Architecture

The future of recurrent neural networks architecture lies in its potential to adapt to increasingly complex machine-learning environments and applications. As technology progresses, enhancements in computational power and data availability have created opportunities for more sophisticated RNNs. Research is actively focused on developing hybrid models that integrate the conceptual strengths of recurrent neural networks architecture with other architectures like convolutional neural networks (CNNs) and transformers. Such integrative models harness the strengths of each architecture to produce more robust and accurate predictions, particularly in areas requiring nuanced understanding, such as natural language processing and computer vision.

Additionally, future advances in the recurrent neural networks architecture will likely focus on overcoming existing limitations in training difficulty and resource efficiency. New optimization techniques, architectural additions such as attention mechanisms, and model compression strategies are of particular interest. Attention mechanisms, for instance, have already shown considerable promise in sequence modeling tasks: by focusing selectively on the relevant parts of the input, they can improve RNN performance. As the field evolves, the recurrent neural networks architecture continues to hold promise for sequence data processing across industries.
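
To show what "focusing selectively" can mean in practice, here is a minimal sketch of dot-product attention over a sequence of RNN hidden states. It assumes the simplest scoring function (a plain dot product between a query vector and each hidden state); the names `attend`, `hidden_states`, and `query`, and the example values, are hypothetical.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, hidden_states):
    """Dot-product attention: weight each hidden state by its similarity to the query."""
    scores = [sum(q * h for q, h in zip(query, state)) for state in hidden_states]
    weights = softmax(scores)
    dim = len(hidden_states[0])
    # The context vector is the weighted average of all hidden states.
    context = [sum(w * state[k] for w, state in zip(weights, hidden_states))
               for k in range(dim)]
    return context, weights

# Hypothetical hidden states (one per time step) and a query vector.
hidden_states = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
query = [2.0, 0.0]
context, weights = attend(query, hidden_states)
print(weights)
print(context)
```

Instead of relying only on the final hidden state, the model reads a weighted mix of all of them, with the largest weight on the state most similar to the query. This gives later processing steps a direct path to early time steps.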

Challenges in Recurrent Neural Networks Architecture

Despite the advantages offered by the recurrent neural networks architecture, several challenges must be addressed to enhance its effectiveness. A primary challenge is the computational cost of training RNN models, which can be resource-intensive. This cost arises from the recurrent nature of the architecture: each time step depends on the result of the previous one, so the computation cannot be parallelized across the sequence, which lengthens training times. Optimizing the training process therefore remains a core focus area, as it can significantly affect the performance and applicability of recurrent neural networks in real-time applications.

Moreover, while the recurrent neural networks architecture is adept at processing sequential data, it faces significant challenges with longer sequences because of vanishing and exploding gradients. These problems degrade learning performance, since the network struggles to update its weights effectively. Mitigating them through algorithmic solutions or architectural modifications is therefore integral to the future advancement of recurrent neural networks. Scalability, model interpretability, and the ability to generalize across diverse datasets also remain critical focal points in the ongoing development of the recurrent neural networks architecture.
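
One widely used algorithmic mitigation for exploding gradients is gradient clipping, which is not named in the text above but serves as a concrete example of such a solution. The sketch below rescales a set of gradients whenever their combined L2 norm exceeds a threshold; the function name `clip_by_global_norm`, the threshold, and the example values are assumptions for illustration.

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Rescale a list of gradient vectors so their combined L2 norm is at most max_norm.

    Returns the (possibly rescaled) gradients and the norm measured before clipping.
    """
    total = math.sqrt(sum(g * g for vec in grads for g in vec))
    if total <= max_norm:
        return grads, total  # small enough: leave the gradients untouched
    scale = max_norm / total
    return [[g * scale for g in vec] for vec in grads], total

# Hypothetical gradients for two parameter groups; their combined norm is 13.
grads = [[3.0, 4.0], [12.0]]
clipped, norm_before = clip_by_global_norm(grads, max_norm=1.0)
print(norm_before)
print(clipped)
```

Clipping changes only the length of the gradient, not its direction, so a single oversized update cannot destabilize training. It does not help with vanishing gradients, which are usually addressed architecturally, for example with gated units such as LSTM or GRU.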