Transfer learning has become a central technique for neural networks: rather than training from scratch, a model reuses knowledge acquired on one task to tackle a new one. In practice, this means taking a pre-trained network and adapting it to a new problem with comparatively small adjustments, which reduces the time and resources required for training. This article covers the fundamentals of transfer learning, its applications and benefits, and the challenges it raises in contemporary AI research and deployment.

The Fundamentals of Transfer Learning for Neural Networks

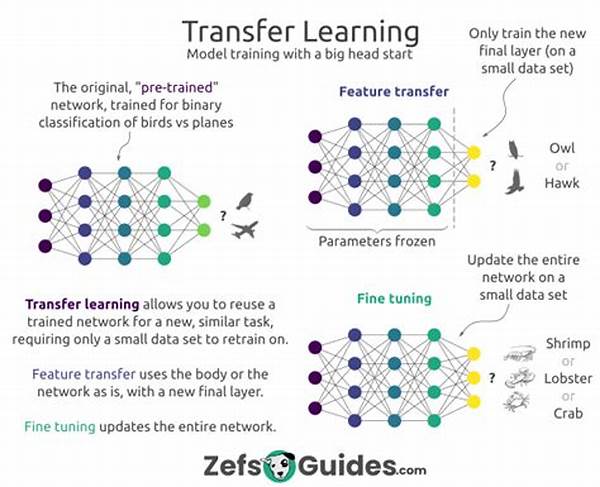

Transfer learning for neural networks involves adapting a pre-trained model to a new, related task. This approach reduces the need for the extensive computational resources and large datasets that training a neural network from scratch typically requires. The primary mechanism is fine-tuning: the weights of an existing network serve as the starting point and are updated on the new task, repurposing what the network has already learned. The technique is widely used in image recognition and natural language processing, among other domains, and it works best when the source and target tasks share similar characteristics.

The implementation of transfer learning for neural networks can be broken down into two main stages. First, a model is trained on a large, diverse dataset so that it learns broadly useful features. Second, this pre-trained model is refined on a smaller dataset specific to the task in question. The method is particularly efficient when data is limited, allowing faster deployment and broader access to state-of-the-art models.
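The two stages can be sketched in a few lines of plain Python. This is only a toy illustration: a one-dimensional linear model stands in for a neural network, and the datasets, learning rate, and step counts are all assumptions chosen for the example, not values from any real system.

```python
def mse(w, b, data):
    """Mean squared error of the linear model y = w*x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def train(w, b, data, lr, steps):
    """Plain gradient descent on the mean squared error."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b

# Stage 1: "pre-train" on a large source dataset (assumed rule: y = 3x + 1).
source = [(i / 100, 3 * (i / 100) + 1) for i in range(-100, 101)]
w_pre, b_pre = train(0.0, 0.0, source, lr=0.1, steps=500)

# Stage 2: fine-tune on a small, related target dataset (assumed rule: y = 3x + 2),
# using only a handful of gradient steps.
target = [(-0.5, 0.5), (0.0, 2.0), (0.5, 3.5)]
w_ft, b_ft = train(w_pre, b_pre, target, lr=0.1, steps=20)

# Baseline: the same small budget, but starting from scratch.
w_scratch, b_scratch = train(0.0, 0.0, target, lr=0.1, steps=20)

loss_ft = mse(w_ft, b_ft, target)
loss_scratch = mse(w_scratch, b_scratch, target)
print(f"fine-tuned loss:   {loss_ft:.6f}")
print(f"from-scratch loss: {loss_scratch:.6f}")
```

Under these assumptions the fine-tuned model ends with a much lower loss on the target data than the model trained from scratch with the same number of steps, because the source and target tasks share the same slope and only the offset has to be relearned.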

Key Components of Transfer Learning for Neural Networks

1. Pre-trained Models: Serve as the starting point, so the expensive initial training phase does not have to be repeated.

2. Fine-tuning: Continuing to train some or all of the pre-trained parameters for optimal performance on a specific dataset or task.

3. Feature Extraction: Keeping the pre-trained layers fixed and reusing the features they compute as input to a new, task-specific output layer.

4. Domain Adaptation: Adapting models to different domains while maintaining core learned features.

5. Faster Training: Starting from pre-trained weights reduces time and computational costs, facilitating quicker model deployment.
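To make the feature extraction component concrete, here is a minimal sketch in plain Python. The base layer, its weights (`BASE_WEIGHTS`), the four data points, and the training settings are all hypothetical values invented for illustration. The base is treated as frozen, and only a small logistic-regression head is trained on top of its output.

```python
import math

# Hypothetical "pre-trained" base: fixed weights mapping 2 inputs to 2 features.
BASE_WEIGHTS = [[1.0, 1.0], [1.0, -1.0]]

def extract_features(x):
    """Frozen base layer with ReLU; its weights are never updated."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in BASE_WEIGHTS]

def predict(head, feats):
    """Logistic-regression head applied to the extracted features."""
    z = head["bias"] + sum(w * f for w, f in zip(head["weights"], feats))
    return 1.0 / (1.0 + math.exp(-z))

def train_head(data, lr=0.5, epochs=200):
    """Train only the head with stochastic gradient descent on the log loss."""
    head = {"weights": [0.0, 0.0], "bias": 0.0}
    for _ in range(epochs):
        for x, y in data:
            feats = extract_features(x)
            err = predict(head, feats) - y  # gradient of the log loss w.r.t. z
            head["weights"] = [w - lr * err * f for w, f in zip(head["weights"], feats)]
            head["bias"] -= lr * err
    return head

# Small target dataset: label 1 when the first input dominates, else 0.
data = [([1.0, 0.0], 1), ([0.9, 0.2], 1), ([0.0, 1.0], 0), ([0.2, 0.9], 0)]
head = train_head(data)
predictions = [int(predict(head, extract_features(x)) > 0.5) for x, _ in data]
print(predictions)
```

Fine-tuning would differ only in that the base weights would also receive gradient updates, usually with a smaller learning rate than the head.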

Advantages of Transfer Learning for Neural Networks

Transfer learning for neural networks offers several advantages. Primarily, it allows existing models to be repurposed efficiently, greatly reducing the time needed compared with training new models from scratch. This translates into cost savings and shorter deployment timelines, both critical in fast-paced commercial environments. Moreover, transfer learning can improve accuracy, because pre-trained models have already captured complex patterns from large datasets that a model trained from scratch on limited data would be unlikely to learn.

Furthermore, transfer learning for neural networks supports effective learning when datasets are small. By leveraging knowledge from related tasks or domains, models can reach high performance without the large data volumes typically needed. It also broadens access to state-of-the-art models, since teams without the resources to train them from scratch can still adapt them, which supports progress in fields such as healthcare, finance, and autonomous systems. In this way, the technique helps bridge the gap between sophisticated AI capabilities and practical, real-world applications.

Implementing Transfer Learning for Neural Network Applications

Transfer learning for neural networks can be applied across a wide range of industry applications. In practice, the first step is to select a pre-trained model whose original domain aligns closely with the task at hand. The next step is to fine-tune this model on task-specific data, often a fraction of what training from scratch would require, so that the model is tailored to its new application while retaining the core competencies acquired during initial training.

Transfer learning has proven effective in numerous domains. In image classification, models trained on large datasets such as ImageNet are fine-tuned to identify specific categories, shortening training while maintaining high accuracy. Similarly, in natural language processing, pre-trained language models like BERT are fine-tuned for specific tasks and domains, allowing them to handle context-specific language that generic training did not emphasize. Such implementations illustrate the versatility of transfer learning and help explain its position as a cornerstone of modern AI development.

Maximizing the Potential of Transfer Learning for Neural Networks

Transfer learning for neural networks offers a pragmatic answer to two inherent challenges of training models from scratch: data scarcity and computational cost. By building on pre-trained models, developers can work around both and explore applications that would otherwise be out of reach. One significant benefit is rapid prototyping and deployment of AI models, which is essential for staying competitive in dynamic markets.

Nevertheless, good results with transfer learning require careful execution. Selecting an appropriate base model is crucial, because it must already have learned features that are relevant to the new task. Moreover, hyperparameters chosen during fine-tuning, such as the learning rate and the number of training steps, can greatly influence model performance, which is why domain expertise matters. As research advances, the methodologies underlying transfer learning for neural networks are expected to evolve, enabling greater precision and effectiveness in AI applications.
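The sensitivity to hyperparameters can be illustrated with a small learning-rate sweep. The sketch below is a toy example under stated assumptions: a one-dimensional linear model stands in for the network, `W_PRE` and `B_PRE` are hypothetical pre-trained weights, and the data and candidate learning rates are invented for illustration. Each candidate is used to fine-tune from the same starting point and is then scored on held-out validation data.

```python
def mse(w, b, data):
    """Mean squared error of the linear model y = w*x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def fine_tune(w, b, train_data, lr, steps=50):
    """Gradient descent on the mean squared error, starting from (w, b)."""
    n = len(train_data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in train_data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in train_data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b

# Hypothetical pre-trained weights (assumed source rule: y = 3x + 1).
W_PRE, B_PRE = 3.0, 1.0

# Small target dataset (assumed rule: y = 2.5x + 2), split into train and validation.
train_data = [(-1.0, -0.5), (0.0, 2.0), (1.0, 4.5)]
val_data = [(-0.5, 0.75), (0.5, 3.25)]

# Try a learning rate that is too small, one that is moderate, and one that is too large.
results = {}
for lr in (0.001, 0.1, 1.2):
    w, b = fine_tune(W_PRE, B_PRE, train_data, lr)
    results[lr] = mse(w, b, val_data)

best_lr = min(results, key=results.get)
print(results)
print("best learning rate:", best_lr)
```

In this setup the smallest rate barely moves the weights within the step budget, the largest one diverges, and the moderate one gives the lowest validation loss. Real networks show the same qualitative pattern, although the useful range has to be found empirically for each model and dataset.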

Challenges in Transfer Learning for Neural Networks

1. Model Selection: Choosing the right pre-trained model is vital for effective knowledge transfer.

2. Domain Mismatch: Discrepancies between source and target domains can impede transfer efficiency.

3. Overfitting Risks: Fine-tuning on small datasets can lead to overfitting, which calls for safeguards such as regularization, early stopping, or keeping some layers frozen.

4. Hyperparameter Sensitivity: Fine-tuning demands precise hyperparameter calibration to achieve optimal results.

5. Resource Dependency: Large pre-trained models can be costly to store, fine-tune, and serve, so working within compute and memory constraints without sacrificing performance can be challenging.
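One common safeguard against the overfitting risk listed above is early stopping: fine-tuning is halted once the validation loss stops improving. The helper below is a minimal sketch of that rule. The function name, the `patience` parameter, and the sample loss values are illustrative assumptions and are not taken from any particular library.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return (best_epoch, best_loss), stopping once the validation loss
    has failed to improve for `patience` consecutive epochs."""
    best_loss = float("inf")
    best_epoch = -1
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            break  # no improvement for `patience` epochs: stop training
    return best_epoch, best_loss

# Illustrative validation losses recorded after each fine-tuning epoch.
val_losses = [0.90, 0.62, 0.48, 0.51, 0.55, 0.47]
stopped = early_stopping_epoch(val_losses, patience=2)
print(stopped)  # (2, 0.48)
```

With a patience of 2, training stops after epoch 4 and the checkpoint from epoch 2 is kept, so the slightly better loss at epoch 5 is never reached. A larger patience trades extra training time for a lower chance of stopping too early.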

Future Prospects of Transfer Learning for Neural Networks

Transfer learning for neural networks is expected to keep advancing. Emerging techniques promise to extend its applicability and to improve the scalability and adaptability of AI models across diverse sectors. As more sophisticated algorithms are developed, the balance between computational efficiency and model precision should improve further, supporting wider integration of AI into everyday applications.